Configuring application networks

This guide uses a worked example to show how to configure application networking. For more details, see:

- Site Networking configuration. The application networking described below depends on the associated configuration on the site.

- Application Networking

Application networking overview

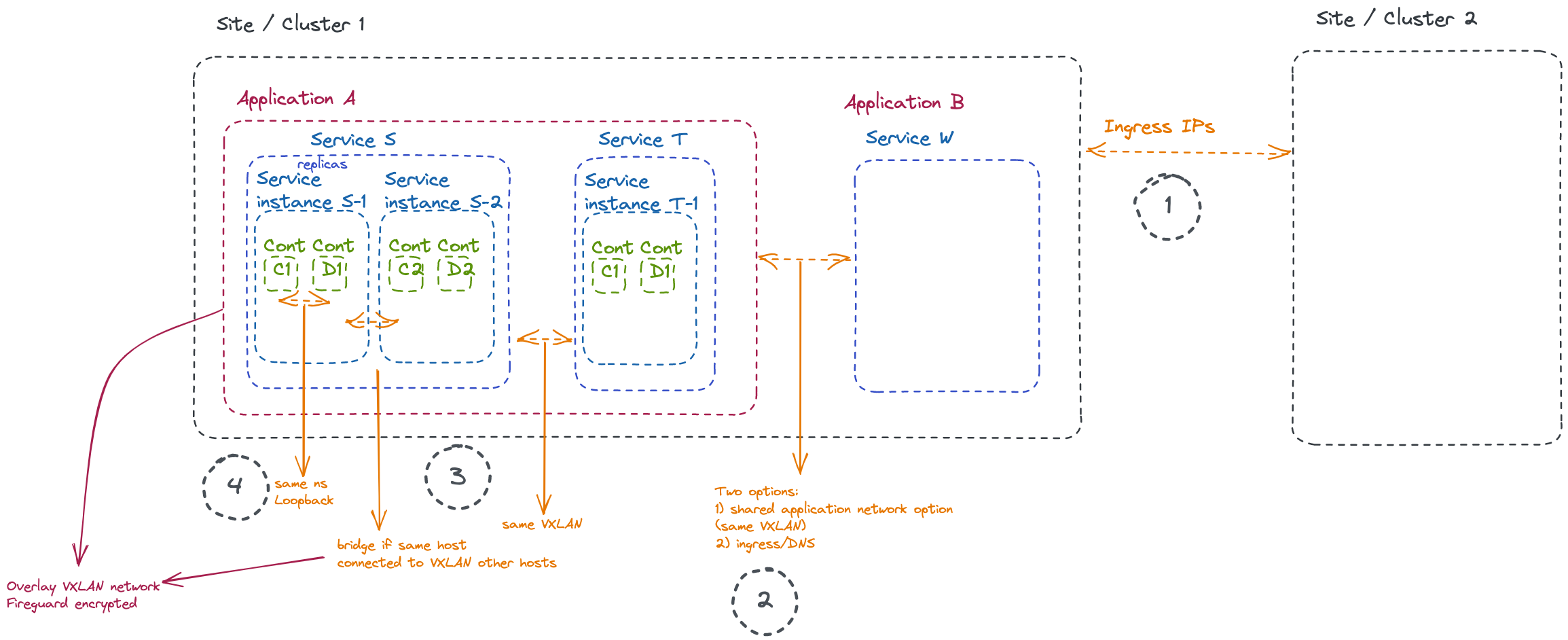

The networking between applications, services, and containers can be summarized as follows.

Key takeaways illustrated:

- Applications that want to communicate across sites can use ingress IPs

- Applications that want to communicate within a site have two options:

  - Ingress IPs: with this option the applications are also available from the “outside”

  - Shared network between applications

- Services within the same application are connected over the same VXLAN and can communicate directly (on the same host or across hosts on the site).

- Containers within a service instance are in the same network namespace, i.e. they can communicate over localhost

Also remember that all applications run on separate isolated VXLAN networks.
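The takeaways above can be condensed into a small decision function. The following Python sketch is illustrative only: the `Endpoint` type, the `reachable_via` helper, and the application and network names are hypothetical and not part of any Avassa API. It simply restates the rules in this section and ignores inbound and outbound access rules.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Endpoint:
    """Hypothetical description of a service instance, for illustration only."""
    site: str
    application: str
    shared_network: Optional[str] = None  # shared application network name, if any
    has_ingress: bool = False             # True if an ingress IP is configured


def reachable_via(src: Endpoint, dst: Endpoint) -> list[str]:
    """Return the ways src can reach dst according to the takeaways above."""
    options = []
    same_site = src.site == dst.site
    if same_site and src.application == dst.application:
        # Services in one application share the application network on a site.
        options.append("application network")
    elif (same_site and src.shared_network is not None
          and src.shared_network == dst.shared_network):
        # Different applications attached to the same shared network.
        options.append("shared application network")
    if dst.has_ingress:
        # Ingress IPs can be used within a site and across sites.
        options.append("ingress IP")
    return options


app_a = Endpoint("site-1", "app-a", has_ingress=True)
app_b = Endpoint("site-1", "app-b", shared_network="shared-net")
app_c = Endpoint("site-1", "app-c", shared_network="shared-net")
remote = Endpoint("site-2", "app-a", has_ingress=True)

print(reachable_via(app_b, app_c))   # ['shared application network']
print(reachable_via(app_b, app_a))   # ['ingress IP']
print(reachable_via(app_a, app_b))   # []
print(reachable_via(app_a, remote))  # ['ingress IP']
```

An empty result means the two applications are isolated from each other, which is the default between applications.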

An example

We will now illustrate the above with an example based on four popcorn applications: pop1, pop2, pop3, and pop4. (You can use the application from the first tutorial, with the same container in all services.)

They are all basically the same application:

    name: pop<n>
    services:
      - name: pop
        mode: replicated
        replicas: 1
        network:
          outbound-access:
            allow-all: true
        containers:
          - name: popcorn
            image: registry.gitlab.com/avassa-public/movie-theaters-demo/kettle-popper-manager

In order to illustrate communication from the containers we have enabled all outgoing requests.

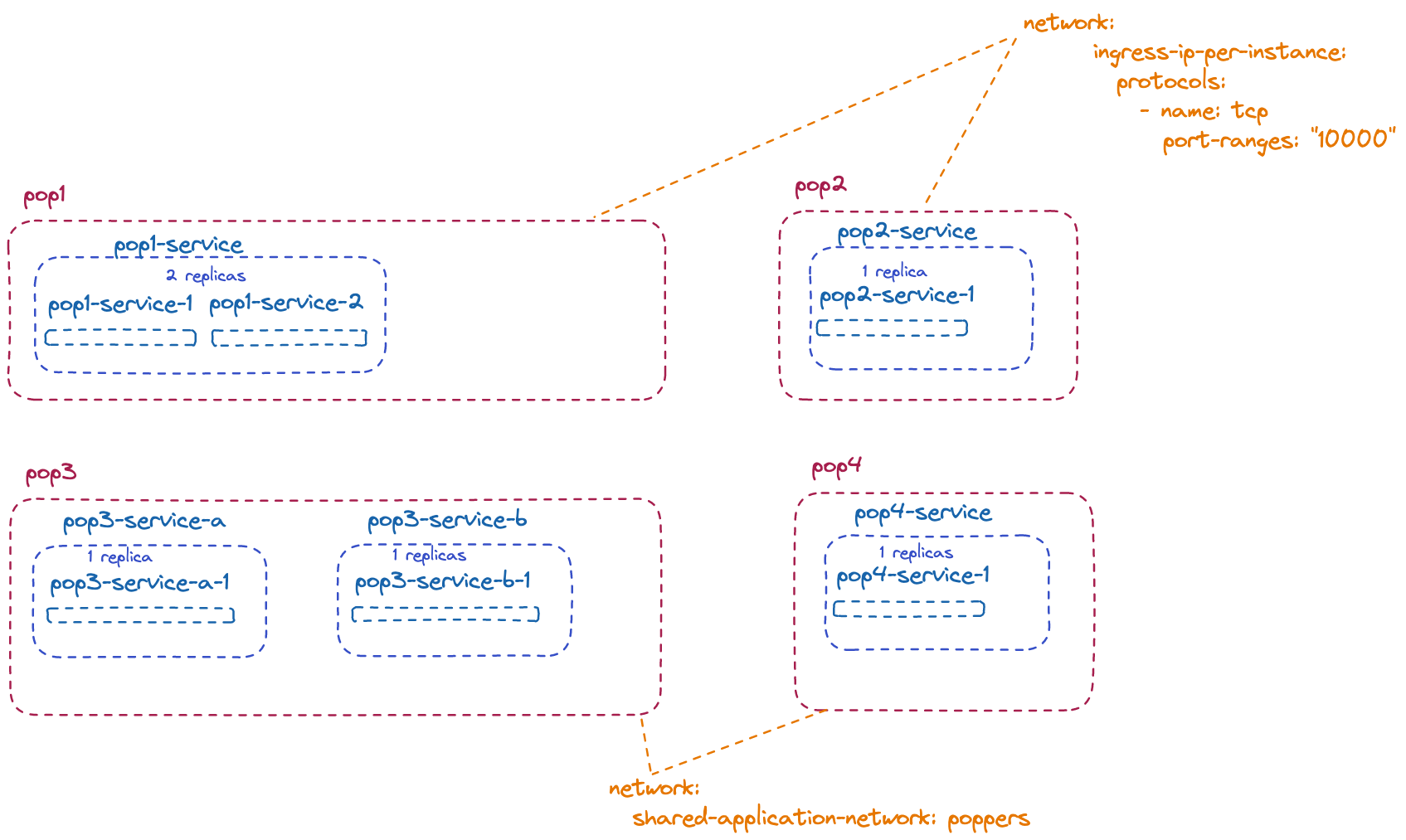

We have configured the applications with different options, as shown in the illustration:

- The pop1 service has two replicas; all the other application services have only one replica.

- The pop1 and pop2 applications have configured ingress addresses. (The site must be configured to allow ingress IP addresses, either from a pool or through DHCP. You can also use port forwarding, which means that the services share the host IP address and the configured ports are forwarded to the services.)

- pop3 has two services

- pop3 and pop4 have a shared application network and no ingress

Configuration of pop1

    name: pop1
    services:
      - name: pop1-service
        mode: replicated
        replicas: 2
        containers:
          - name: pop
            image: registry.gitlab.com/avassa-public/movie-theaters-demo/kettle-popper-manager
        ...
        network:
          ingress-ip-per-instance:
            protocols:
              - name: tcp
                port-ranges: "10000"
          outbound-access:
            allow-all: true

TCP port 10000 is used here only as an example, to show how protocols and ports are specified.

Configuration of pop4

    name: pop4
    services:
      - name: pop4-service
        mode: replicated
        replicas: 1
        containers:
          - name: pop
            image: registry.gitlab.com/avassa-public/movie-theaters-demo/kettle-popper-manager
        ...
        network:
          outbound-access:
            allow-all: true
    network:
      shared-application-network: poppers

In this example, we have:

- a tenant: tenant-1

- a Control Tower domain: demo.acme.avassa.net

- a site: site-1

After deployment, the application state will show the following network information on a site:

State of pop1

    name: pop1
    services:
      - name: pop1-service
        replicas: 2
        share-pid-namespace: false
        ...
        network:
          ingress-ip-per-instance:
            protocols:
              - name: tcp
                port-ranges: "10000"
          outbound-access:
            allow-all: true
    ...
    service-instances:
      - name: pop1-service-1
        ...
        application-network:
          ips:
            - 172.30.0.2/16
          dns-records:
            - pop1-service-1.pop1.internal. 15 IN A 172.30.0.2
            - pop1-service.pop1.internal. 15 IN A 172.30.0.2
        gateway-network:
          ips:
            - 172.29.255.3/24
          outbound-network-access:
            inherited:
              allow-all: true
            from-application:
              allow-all: true
            combined:
              allow-all: true
        ingress:
          ips:
            - 192.168.72.102
          dns-records:
            - pop1-service-1.pop1.tenant-1.site-1.demo.acme.avassa.net. 15 IN A 192.168.72.102
            - pop1-service.pop1.tenant-1.site-1.demo.acme.avassa.net. 15 IN A 192.168.72.102
          inbound-network-access:
            inherited:
              allow-all: true
            from-application:
              allow-all: true
            combined:
              allow-all: true
      - name: pop1-service-2

Note that the service instances have both internal DNS entries and external ingress DNS entries. The internal DNS names can be used to communicate between replicas, and clients can use the external DNS names to reach the service. The Avassa Edge Enforcer manages the application and gateway networks, so you do not need to configure them yourself; you only use the application and ingress DNS names. Communication between services always goes through the application network, as shown further down.

Each service instance has two interfaces, one for the gateway network and one for the application network. For example, in pop1-service-1 you can see that eth0 maps to the gateway address and eth1 to the application network:

    / # ip addr
    1: lo:...
    49: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP qlen 1000
        link/ether 3a:7a:26:25:d3:40 brd ff:ff:ff:ff:ff:ff
        inet 172.29.252.3/24 brd 172.29.252.255 scope global eth0
           valid_lft forever preferred_lft forever
    54: eth1@if5: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1370 qdisc noqueue state UP qlen 1000
        link/ether 42:25:58:d6:32:05 brd ff:ff:ff:ff:ff:ff
        inet 172.30.0.2/16 brd 172.30.255.255 scope global eth1
           valid_lft forever preferred_lft forever

Looking at pop3, which does not have an ingress configured:

    name: pop3
    ...
    service-instances:
      - name: pop3-service-a-1
        ...
        application-network:
          shared-application-network: poppers
          ips:
            - 172.30.0.2/16
          dns-records:
            - pop3-service-a-1.pop3.internal. 15 IN A 172.30.0.2
            - pop3-service-a.pop3.internal. 15 IN A 172.30.0.2
        gateway-network:
          ips:
            - 172.29.253.2/24
          outbound-network-access:
            inherited:
              allow-all: true
            from-application:
              allow-all: true
            combined:
              allow-all: true
        ingress:
          ips: []
        ...
      - name: pop3-service-b-1

Since no ingress IP is configured, no external DNS entries are available.

After this setup, we have the following IP scheme (you will see different names depending on your tenant and global Control Tower domain):

| Application: Service instance | Shared network | Ingress IP | Ingress DNS | Application network DNS |
|---|---|---|---|---|
| pop1:pop1-service-1 | - | 192.168.72.102 | pop1-service-1.pop1.tenant-1.site-1.demo.acme.avassa.net. | pop1-service-1.pop1.internal. |
| pop1:pop1-service-2 | - | 192.168.72.101 | pop1-service-2.pop1.tenant-1.site-1.demo.acme.avassa.net. | pop1-service-2.pop1.internal. |
| pop2:pop2-service-1 | - | 192.168.72.100 | pop2-service-1.pop2.tenant-1.site-1.demo.acme.avassa.net. | pop2-service-1.pop2.internal. |
| pop3:pop3-service-a-1 | poppers | - | - | pop3-service-a-1.pop3.internal. |
| pop3:pop3-service-b-1 | poppers | - | - | pop3-service-b-1.pop3.internal. |
| pop4:pop4-service-1 | poppers | - | - | pop4-service-1.pop4.internal. |

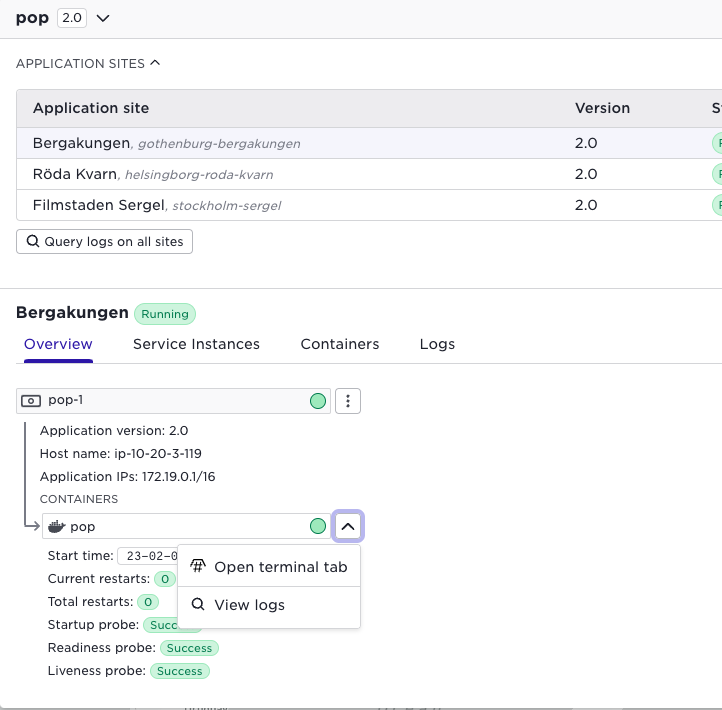

To build the table above, you can navigate to the “Service Instances” tab for an application on the site. DNS information is available by inspecting the YAML.

Alternatively, use supctl:

    supctl show --site <site> applications <application>
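The dns-records entries in the application state use a zone-file-like form (name, TTL, class, type, value). When collecting them into a table, a small parser can help. The Python sketch below is only an illustration: `DnsRecord` and `parse_record` are hypothetical helper names, and it assumes every record has exactly these five whitespace-separated fields, as the A records shown in this guide do.

```python
from typing import NamedTuple


class DnsRecord(NamedTuple):
    name: str
    ttl: int
    rclass: str
    rtype: str
    value: str


def parse_record(line: str) -> DnsRecord:
    """Split '<name> <ttl> <class> <type> <value>' into its fields."""
    name, ttl, rclass, rtype, value = line.split()
    return DnsRecord(name, int(ttl), rclass, rtype, value)


rec = parse_record("pop1-service-1.pop1.internal. 15 IN A 172.30.0.2")
print(rec.name, rec.value)  # pop1-service-1.pop1.internal. 172.30.0.2
```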

You can see the default DNS patterns as explained in the DNS Fundamentals section:

- The internal names are <SERVICE>-<INSTANCE-ID>.<APPLICATION>.internal.

- The external names are <SERVICE>-<INSTANCE-ID>.<APPLICATION>.<TENANT>.<SITE-NAME>.<GLOBAL-DOMAIN>.
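As a quick illustration of the two patterns, the Python sketch below composes the names from their parts. The function names are hypothetical helpers, not part of supctl or any Avassa library; the values are the ones used in this example.

```python
def internal_name(service: str, instance_id: int, application: str) -> str:
    """<SERVICE>-<INSTANCE-ID>.<APPLICATION>.internal."""
    return f"{service}-{instance_id}.{application}.internal."


def external_name(service: str, instance_id: int, application: str,
                  tenant: str, site: str, global_domain: str) -> str:
    """<SERVICE>-<INSTANCE-ID>.<APPLICATION>.<TENANT>.<SITE-NAME>.<GLOBAL-DOMAIN>."""
    return (f"{service}-{instance_id}.{application}."
            f"{tenant}.{site}.{global_domain}.")


print(internal_name("pop1-service", 1, "pop1"))
# pop1-service-1.pop1.internal.
print(external_name("pop1-service", 1, "pop1",
                    "tenant-1", "site-1", "demo.acme.avassa.net"))
# pop1-service-1.pop1.tenant-1.site-1.demo.acme.avassa.net.
```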

To try connections between services, you can open an interactive shell terminal by navigating to a container in the UI.

We will now run a couple of pings to illustrate the networking. Each of the following commands is run from within the first container mentioned.

From pop1:pop1-service-1 to pop1:pop1-service-2 (service replica within the same service)

    / # ping pop1-service-2.pop1.internal.
    PING pop1-service-2.pop1.internal. (172.30.0.1): 56 data bytes
    64 bytes from 172.30.0.1: seq=0 ttl=64 time=0.496 ms

Since they are replicas within the same service they can communicate with internal DNS names.

You can also try the external DNS ingress:

    / # ping pop1-service-2.pop1.tenant-1.site-1.demo.acme.avassa.net.
    PING pop1-service-2.pop1.tenant-1.site-1.demo.acme.avassa.net. (192.168.72.101): 56 data bytes
    64 bytes from 192.168.72.101: seq=0 ttl=63 time=0.255 ms

From pop3:pop3-service-a-1 to pop3:pop3-service-b-1 (different services within the same application)

    / # ping pop3-service-b-1.pop3.internal.
    PING pop3-service-b-1.pop3.internal. (172.30.0.1): 56 data bytes
    64 bytes from 172.30.0.1: seq=0 ttl=64 time=0.451 ms

From pop1:pop1-service-2 to pop2:pop2-service-1 (service instances in different applications)

    / # ping pop2-service-1.pop2.internal.
    ping: bad address 'pop2-service-1.pop2.internal.'

But again, external IP ingress will work fine:

    / # ping pop2-service-1.pop2.tenant-1.site-1.demo.acme.avassa.net.
    PING pop2-service-1.pop2.tenant-1.site-1.demo.acme.avassa.net. (192.168.72.100): 56 data bytes
    64 bytes from 192.168.72.100: seq=0 ttl=63 time=0.583 ms

From pop4:pop4-service-1 to pop3:pop3-service-a-1 (different applications but shared network)

    / # ping pop3-service-a-1.pop3.internal.
    PING pop3-service-a-1.pop3.internal. (172.30.0.2): 56 data bytes
    64 bytes from 172.30.0.2: seq=0 ttl=64 time=0.629 ms

And for illustration purposes, from pop4:pop4-service-1 to pop1:pop1-service-1 using ingress:

    / # ping pop1-service-1.pop1.tenant-1.site-1.demo.acme.avassa.net.
    PING pop1-service-1.pop1.tenant-1.site-1.demo.acme.avassa.net. (192.168.72.102): 56 data bytes
    64 bytes from 192.168.72.102: seq=0 ttl=63 time=0.621 ms

If we log in to the host where the applications are running and run nslookup, we can see that the host DNS resolves the names:

    nslookup pop1-service-1.pop1.tenant-1.site-1.demo.acme.avassa.net. localhost
    Server: localhost
    Address: 127.0.0.1#53
    Name: pop1-service-1.pop1.tenant-1.site-1.demo.acme.avassa.net
    Address: 192.168.72.102

Debug network access issues

In the example above the application specification has requested full access to the network, both for outbound connections originating from the application's services (by means of the allow-all: true configuration statement under outbound-access) and for inbound connections from external endpoints to the application's services (allowed by default). However, network access might be restricted by a site provider. In that case, even if the application specification requests full access, it may only get restricted access.

Suppose the ping operation from the example above failed:

    / # ping pop1-service-1.pop1.tenant-1.site-1.demo.acme.avassa.net.
    PING pop1-service-1.pop1.tenant-1.site-1.demo.acme.avassa.net. (192.168.72.102): 56 data bytes
    --- pop1-service-1.pop1.tenant-1.site-1.demo.acme.avassa.net. ping statistics ---
    10 packets transmitted, 0 packets received, 100% packet loss

To identify such a situation, look at the service instance state once the application is deployed:

    supctl show --site site-1 applications pop4 service-instances pop4-service-1
    service-instances:
      - name: pop4-service-1
        ...
        gateway-network:
          ips:
            - 172.29.255.4/24
          outbound-network-access:
            inherited:
              default-action: deny
              rules:
                - 192.168.73.0/24: allow
            from-application:
              allow-all: true
            combined:
              default-action: deny
              rules:
                - 192.168.73.0/24: allow

Here we can see that even though the application has requested full access to the network (the application rules are allow-all: true), the inherited rules only allow access to the 192.168.73.0/24 network, which prevents access to the 192.168.72.102 address. This means that one of the ancestor tenants has assigned a set of rules restricting the outbound network access.

Our next step would be to ask a representative of the site provider tenant to take a look at the rules. To identify the rules that apply to a specific application, they could invoke a debug action on the outbound-network-access of the same service instance, using their own site provider credentials:

    supctl do --site site-1 tenants tenant-1 applications pop4 service-instances pop4-service-1 \
      gateway-network outbound-network-access debug

    inherited:
      allow-all: true
    assigned:
      tenant: tenant-1
      source: assigned-site-profile
      profile-name: restricted-network
      default-action: deny
      rules:
        192.168.73.0/24: allow
    from-application:
      allow-all: true
    combined:
      default-action: deny
      rules:
        192.168.73.0/24: allow

Here, the site provider can see that tenant-1 has been assigned the resource-profile restricted-network on site site-1, which only allows outbound connections to 192.168.73.0/24. For the site provider tenant itself, network access is unrestricted in this case (the inherited rules are allow-all: true); otherwise they would have to ask their parent tenant to run the same debug procedure using their credentials.

Now, suppose that the site provider decides that this was just a typo and updates the outbound-network-access specification in the resource profile called restricted-network to also allow access to 192.168.72.0/24.

Once the site provider updates the resource-profile, or updates a reference to the resource-profile that defines network access, the change is applied immediately to all affected running applications. This behaviour is different from other resource types, e.g. allocated CPU and memory, which require the application to be upgraded before the change is applied.

The application owner tenant may verify that the new network access has been applied to the application:

    service-instances:
      - name: pop4-service-1
        ...
        gateway-network:
          ips:
            - 172.29.255.4/24
          outbound-network-access:
            inherited:
              default-action: deny
              rules:
                - 192.168.72.0/24: allow
                - 192.168.73.0/24: allow
            from-application:
              allow-all: true
            combined:
              default-action: deny
              rules:
                - 192.168.72.0/24: allow
                - 192.168.73.0/24: allow
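To make the effect of the combined rules concrete, the Python sketch below checks a destination address against the combined outbound rules before and after the profile update. It is a simplified model, not the Edge Enforcer's implementation: `is_allowed` is a hypothetical helper, the rules are written as plain dictionaries, and it assumes that the first matching rule decides and that default-action applies when no rule matches. The actual rule precedence is not described in this guide.

```python
import ipaddress


def is_allowed(address: str, access: dict) -> bool:
    """Evaluate an address against an access specification.

    Assumption: the first matching rule decides; if no rule matches,
    default-action applies. allow-all and deny-all short-circuit.
    """
    if access.get("allow-all"):
        return True
    if access.get("deny-all"):
        return False
    ip = ipaddress.ip_address(address)
    for network, action in access.get("rules", {}).items():
        if ip in ipaddress.ip_network(network):
            return action == "allow"
    return access.get("default-action") == "allow"


# Combined outbound rules of pop4-service-1 before and after the update.
before = {"default-action": "deny",
          "rules": {"192.168.73.0/24": "allow"}}
after = {"default-action": "deny",
         "rules": {"192.168.72.0/24": "allow",
                   "192.168.73.0/24": "allow"}}

target = "192.168.72.102"  # ingress IP of pop1-service-1
print(is_allowed(target, before))  # False
print(is_allowed(target, after))   # True
```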

In many cases, connectivity to pop1's ingress address would now have been restored and the ping command above would work. However, for the sake of this debugging example, suppose it still fails.

The application owner may then inspect the service instance state of the target service (pop1-service-1) to try to identify any issues with the ingress:

    supctl show --site site-1 applications pop1 service-instances pop1-service-1

    service-instances:
      - name: pop1-service-1
        ...
        ingress:
          ips:
            - 192.168.72.102
          inbound-network-access:
            inherited:
              default-action: deny
              rules:
                - 192.168.71.0/24: allow
            from-application:
              allow-all: true
            combined:
              default-action: deny
              rules:
                - 192.168.71.0/24: allow

Here, we can see that only connections from 192.168.71.0/24 are allowed. As the application owner, we don't really know which addressing scheme is used on this site, but we can ask the site provider to double-check this configuration.

The site provider knows that the hosts on site-1 should use addresses within the 192.168.72.0/24 network, so connections from these hosts to the pop1-service ingress address would indeed not be allowed. To identify the resource-profile in effect for this application, the site provider may run the debug action on the service instance's inbound-network-access:

    supctl do --site site-1 tenants tenant-1 applications pop1 service-instances pop1-service-1 \
      ingress inbound-network-access debug

    inherited:
      allow-all: true
    assigned:
      tenant: tenant-1
      source: assigned-site-profile
      profile-name: restricted-network
      default-action: deny
      rules:
        192.168.71.0/24: allow
    from-application:
      allow-all: true
    combined:
      default-action: deny
      rules:
        192.168.71.0/24: allow

The site provider can see that the profile called restricted-network is in effect for this service instance. The site provider may then decide to update the inbound-network-access specification in this profile to allow access from 192.168.72.0/24 addresses.

Now, with both changes in place, the connectivity is restored and the ping command is successful.

    / # ping pop1-service-1.pop1.tenant-1.site-1.demo.acme.avassa.net.
    PING pop1-service-1.pop1.tenant-1.site-1.demo.acme.avassa.net. (192.168.72.102): 56 data bytes
    64 bytes from 192.168.72.102: seq=0 ttl=63 time=0.621 ms

Host networking

Avassa also supports so-called “host networking”. In some rare use cases, the default network operation mode, with services attached to gateway and application networks, does not meet the networking requirements of an application. One example is when Layer 2 connectivity to the host's physical network is required. Host networking bypasses the isolation described above.

Host networking is configured in the following way:

    name: popcorn
    services:
      - name: popper-service
        ...
        network:
          host: true

The tenant needs to be granted the service-host-network-mode capability. In many cases it makes sense to also grant container-net-admin, which gives the tenant full read/write access to the host’s network interfaces. The example below shows how to configure policies accordingly:

    supctl create policy policies <<EOF
    name: extended-networking
    capabilities:
      container-net-admin: allow
      service-host-network-mode: allow
    EOF

    supctl merge tenants popcorn-systems <<EOF
    policies:
      - extended-networking
    EOF

Note that the host networking option is not compatible with user namespaces, so user namespace remapping must either be disabled at install time on the host or disabled per container. This does not apply to Podman installations.

You can read more on host networking in the networking fundamentals section.

Using the Avassa APIs from your application

From an application, the API endpoint for the local Edge Enforcer is always reachable at https://api.internal:4646

For example:

curl -k https://api.internal:4646/v1/get-api-ca-cert

{

"cert": "-----BEGIN CERTIFICATE-----\nMIICGjCCAcCgAwIBAgITAMcKTGWXv9QWdwiPY22okEzynTAKBggqhkjOPQQDAjBj\nMRgwFgYDVQQDEw9BdmFzc2EgQVBJIHJvb3QxEjAQBgNVBAcTCVN0b2NraG9sbTEL\nMAkGA1UEBhMCU0UxDzANBgNVBAoTBkF2YXNzYTEVMBMGA1UECxMMZGlzdHJpYnV0\naW9uMCIYDzIwMjIxMDE2MDgyMDAwWhgPMjAyNjAyMjQxMTU2MDBaMGMxGDAWBgNV\nBAMTD0F2YXNzYSBBUEkgcm9vdDESMBAGA1UEBxMJU3RvY2tob2xtMQswCQYDVQQG\nEwJTRTEPMA0GA1UEChMGQXZhc3NhMRUwEwYDVQQLEwxkaXN0cmlidXRpb24wWTAT\nBgcqhkjOPQIBBggqhkjOPQMBBwNCAAS5fS3k8w5oMTn1GrB+n5FToUS2zA46C/sA\n3qtwNka+HQsmft1zr1uOso2vKDSzA7mKwPXM9cRoSm0OJU8jhDjio08wTTAOBgNV\nHQ8BAf8EBAMCAYYwEgYDVR0TAQH/BAgwBgEB/wIBATAnBgNVHR8EIDAeMBygGqAY\nhhZodHRwOi8vY3JsLmF2YXNzYS5uZXQvMAoGCCqGSM49BAMCA0gAMEUCIQDyqo+7\nZxa+XDNSp6gvBIeqK4Z5tG+TFSagOU98ps617AIge06tFmwq4hRrgblW3zkpeaVa\nP8OskfzSLaWcJa7Rbo4=\n-----END CERTIFICATE-----"

}
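The same call can be made from application code. The Python sketch below mirrors the curl command above using only the standard library; `extract_ca_cert` and `fetch_ca_cert` are hypothetical helper names, and disabling certificate verification is an assumption made solely to match `curl -k`. Only the endpoint and the `cert` field come from the example above.

```python
import json
import ssl
import urllib.request

API_URL = "https://api.internal:4646/v1/get-api-ca-cert"


def extract_ca_cert(body: str) -> str:
    """Return the PEM certificate from a JSON body shaped like the one above."""
    cert = json.loads(body)["cert"]
    if not cert.startswith("-----BEGIN CERTIFICATE-----"):
        raise ValueError("response does not contain a PEM certificate")
    return cert


def fetch_ca_cert(url: str = API_URL) -> str:
    """Fetch the CA certificate without verifying TLS, like `curl -k`."""
    context = ssl.create_default_context()
    context.check_hostname = False       # must be disabled before CERT_NONE
    context.verify_mode = ssl.CERT_NONE  # equivalent of curl's -k flag
    with urllib.request.urlopen(url, context=context) as response:
        return extract_ca_cert(response.read().decode("utf-8"))
```

Calling `fetch_ca_cert()` only works from inside a container running on a site, where api.internal resolves to the local Edge Enforcer.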

Summary of the application networks data model

This section gives an overview of the application data model related to networking. For detailed information, see the reference manual.

- application

  - network

    - shared-application-network: all applications with the same shared application network name are connected to the same isolated network and can talk to each other.

  - services (ingress-ip-per-instance and host are mutually exclusive)

    - ingress-ip-per-instance: this results in ingress IP addresses for all service instances

      - protocols: Only traffic to these ports will be allowed for the given protocol.

      - inbound-access: allowed ingress traffic (source addresses): allow-all, deny-all, default-action and rules

      - match-interface-labels: allocate the ingress address on the interface selected by the match expression. In this way you can control which interfaces are used for ingress.

    - dns-records: A list of records that should be added when this service instance is ready. (In addition to the ones described above)

    - host: this enables host networking for the service. This should be used with care since the service gets access to all networking on the host and the Avassa network infrastructure is not set up. Use this only if you are in full control of the host.

    - outbound-access: rules for outbound communication for the service: allow-all, deny-all, default-action and rules

  - site-dns-records: Extra DNS records that should be added to the name servers on this site as soon as this application is started

A complete example of application network configuration is shown below:

network:

ingress-ip-per-instance:

protocols:

- name: tcp

port-ranges: "9000"

match-interface-labels: movie-theater-owner.com/external

dns-records:

domains:

- domain: default

srv:

- name: _http._tcp

priority: 0

port: 80

outbound-access:

default-action: allow

rules:

192.0.2.0/24: deny

site-dns-records:

domains:

- domain: default

srv:

- name: _http._tcp

priority: 1

port: 80

weight: 100

target: nginx.web.acme.foo.bar.com

cname:

- name: www