Application networking

Introduction

Networking is one of the most important means of input/output for an edge application, allowing it to communicate using standard IP-based protocols. The Avassa solution both enables such communication among the services running as part of the same application within a site and provides access to the wide-area network (WAN) or other external networks. A service acting as a server can also request an ingress address reachable from external networks.

Security is an important aspect of networking. Application private networks are isolated from each other in Avassa, and the traffic on the private network is encrypted when it leaves the host boundaries, because the physical network between the hosts cannot be assumed to be trusted. External network access is guarded by a firewall running on each host. By default no service can be contacted from external networks unless it requests an ingress address, in which case incoming connections are limited to a list of allowed ports and protocols.

Intra-service communication

When talking about networking, the smallest part of an application of interest is the service instance. A service instance may consist of several containers, and these containers run in the same Linux network namespace, sharing the same TCP/IP stack. Communication between containers that are part of the same service instance is thus trivial: they can reach each other over the loopback interface.

Application network

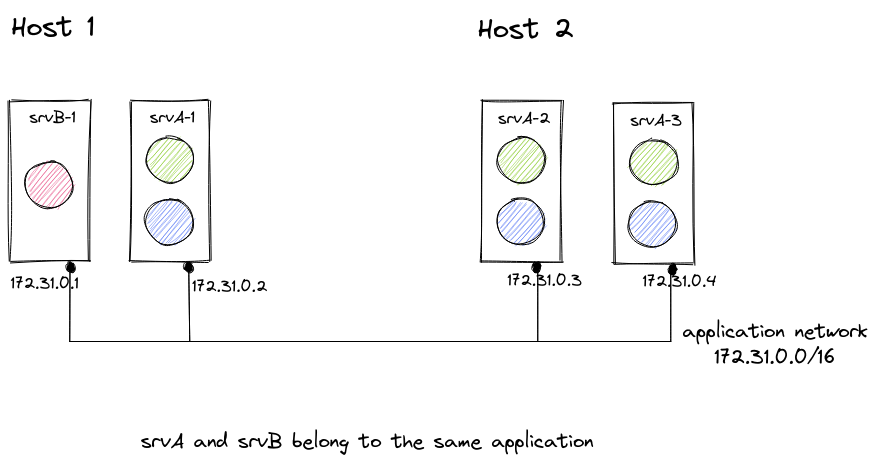

Within one site an application consists of one or several services, each service running in a single or multiple instances. The service instances may be scheduled on the same or different hosts within the site.

The Avassa solution automatically connects all service instances running on the same site and belonging to the same application to an overlay network, called the application network. This means that the service instances can communicate with each other seamlessly regardless of whether they are running on the same or different hosts.

Service instances running on the same host and belonging to the same application are connected to a bridge residing in an application-specific Linux network namespace. This bridge is in turn connected to a VXLAN bridge spanning all hosts within the site that have at least one service instance of this application deployed.

Using VXLAN helps achieve isolation between different applications. However, it cannot be assumed that the physical network between the hosts in the site is trusted, nor can it be assumed to be private. Hence, in order to further protect the private communication between the service instances within an application, an encrypted overlay network based on WireGuard is set up between the hosts within a site. The VXLAN traffic is carried over the WireGuard network to protect it from external eavesdropping or interference.

In order to facilitate service discovery, the IP addresses of the service instances are registered in a local DNS server, configured by default in all containers. Each service instance is registered in DNS under the name <service name>-<instance id>.<application>.internal, and is resolvable for all applications that share the same application network. More details about how DNS works can be found in the DNS chapter.
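The naming scheme above can be illustrated with a minimal Python sketch. The helper names and the example values ("web", "1", "shop") are hypothetical, and the exact format of the instance id is an assumption; the lookup itself only works from inside a container where the site-local DNS server is configured.

```python
import socket


def instance_dns_name(service: str, instance_id: str, application: str) -> str:
    """Build the name <service name>-<instance id>.<application>.internal."""
    return f"{service}-{instance_id}.{application}.internal"


def resolve_instance(service: str, instance_id: str, application: str) -> list:
    """Return the IP addresses registered for an instance, or [] if unknown."""
    name = instance_dns_name(service, instance_id, application)
    try:
        infos = socket.getaddrinfo(name, None, type=socket.SOCK_STREAM)
    except socket.gaierror:
        # The name is not registered, or no suitable DNS server is configured.
        return []
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


# Hypothetical names, for illustration only.
print(instance_dns_name("web", "1", "shop"))  # prints "web-1.shop.internal"
```

Calling resolve_instance("web", "1", "shop") from another service instance on the same application network would then be expected to return that instance's address on the application network.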

In certain cases an application owner may want different applications to communicate with each other in the same way the service instances communicate within one application. For example, this may be appropriate when an application is structured so that different components have different lifecycles and hence each component is defined as a separate application. It is possible to configure an application to be connected to a shared-application-network with a chosen identifier. All of the service instances belonging to applications with the same identifier for shared-application-network are connected to the same application site network. Such a network is, however, always scoped to a single application owner, so it is not possible to communicate with applications run by a different owner in this way.

Unless some service is acting as a router, the application network is an isolated network. The Avassa platform does not route any traffic to or from this network.

Gateway network

In order to access both Avassa APIs and external networks, each service instance has a second network interface connected to a gateway network. A stateful firewall disallows any incoming connections on this network unless an ingress IP address is assigned to the service instance, in which case incoming connections are only allowed on the specified list of ports on the ingress IP. This means that by default only connections initiated by the service instance are allowed on the gateway network.

Gateway networks are host-local, i.e., the gateway networks of two hosts are not connected. Technically, communication with other service instances within the same application on the same host is possible over the gateway network, although the application network is intended for this purpose.

Currently only IPv4 is supported on the gateway network. If the destination is in the external network, then the service instance's gateway network address is translated by NAT masquerading into the address of the host running the service instance. The address that the service instance should use to access Avassa APIs is registered in the local DNS server under the name api.internal.

Bandwidth available for an application when accessing external networks can be limited on a per-host basis in order to prevent a single application from slowing down other applications. This means that the total bandwidth available for all service instances belonging to the same application on one host is limited to a configured value. This is achieved by configuring the Hierarchical Fair Service Curve (HFSC) queueing discipline on all external interfaces (to control upstream bandwidth) and all application virtual interfaces (to control downstream bandwidth) on the host machine, with different applications' network traffic assigned to different HFSC classes.

As a side effect, HFSC ensures that in case the upstream link becomes saturated (so that the host is forced to drop packets), then the available bandwidth is distributed fairly between different applications. However, this is only true when the packets are dropped by the host itself. If the bottleneck is in some other point in the network and the packets are dropped by other networking equipment, for example a busy router, then the host is unaware of the dropping decision and consequently is not able to balance the available bandwidth between different applications.

Outbound access

By default all outbound connections on the gateway network are prohibited, unless otherwise specified for a service in an application specification. The exception is the Avassa API address (referred to by the api hostname inside the service). In order to allow a service to initiate connections to external networks, one may either specify a list of destination networks in the form of an access list, or request that all connections be allowed regardless of destination. This is done on a per-service basis in the application specification.

Outbound access is treated like a resource and can be restricted by a site provider for its subtenants; see the resource management tutorial. This means that the effective outbound access is the intersection of the outbound access rules configured by the ancestor tenants and the access requested by the application specification.
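The intersection rule can be illustrated with a small Python sketch. This is not how the platform implements it; the function names and the example networks are hypothetical, and access lists are modelled simply as lists of IPv4 CIDR blocks.

```python
from ipaddress import IPv4Network


def overlap(a: IPv4Network, b: IPv4Network):
    """Return the overlap of two CIDR blocks, or None if they are disjoint.

    Two CIDR blocks either nest or do not overlap at all, so the overlap
    is always the smaller of the two.
    """
    if a.subnet_of(b):
        return a
    if b.subnet_of(a):
        return b
    return None


def effective_outbound(requested, *ancestor_rules):
    """Intersect the requested networks with each ancestor's allowed networks."""
    effective = {IPv4Network(n) for n in requested}
    for rules in ancestor_rules:
        allowed = [IPv4Network(n) for n in rules]
        narrowed = set()
        for net in effective:
            for rule in allowed:
                common = overlap(net, rule)
                if common is not None:
                    narrowed.add(common)
        effective = narrowed
    return sorted(effective)


# Hypothetical access lists: what the application asks for and what the
# site provider allows for this tenant.
requested = ["10.0.0.0/8", "192.168.1.0/24"]
site_provider = ["10.1.0.0/16", "172.16.0.0/12"]
print([str(n) for n in effective_outbound(requested, site_provider)])
# prints ['10.1.0.0/16']
```

In this example the application requests two networks, but only the part of 10.0.0.0/8 that the site provider also allows remains reachable.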

Note that this limitation does not affect the communication between the services on an application network. Such communication is always unrestricted. Another case where the network access control does not apply is when an application is attached to the host's default network namespace.

It is also worth noting that incoming connections (via an ingress IP address) are restricted by a separate set of rules, defined in the corresponding IP address specification in the application specification. In other words, the rules for outbound access have no effect on connections initiated from outside the service.

Ingress IP

As mentioned in the Gateway network section, by default only outgoing connections are allowed from service instances. However, in many cases an edge application provides some service to clients outside the Avassa system. This means it needs to be able to accept connections from external networks.

In order to do that an application specification may specify that an ingress IP address needs to be provided for all instances of one or more services. The specification must also contain the list of transport layer protocols along with port numbers the service should be reachable on. This information is used to properly configure the firewall. All connection attempts to ports outside the configured ranges are disallowed.
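The resulting default-deny behaviour can be sketched in Python as follows. The IngressRule type and the example rules are hypothetical and only model the idea of a protocol plus port range; they do not reflect the application specification syntax or the actual firewall configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IngressRule:
    protocol: str    # transport protocol, e.g. "tcp" or "udp"
    first_port: int  # first port of the allowed range (inclusive)
    last_port: int   # last port of the allowed range (inclusive)


def is_allowed(rules, protocol: str, port: int) -> bool:
    """Default deny: a connection is accepted only if some rule matches it."""
    return any(
        r.protocol == protocol and r.first_port <= port <= r.last_port
        for r in rules
    )


# Hypothetical rules for one service: HTTPS plus a small UDP range.
rules = [IngressRule("tcp", 443, 443), IngressRule("udp", 5000, 5010)]
print(is_allowed(rules, "tcp", 443))   # True
print(is_allowed(rules, "tcp", 22))    # False
print(is_allowed(rules, "udp", 5005))  # True
```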

An ingress IP address is exclusive to a service instance. This means that network packets arriving at a particular ingress IP address are always forwarded to only one service instance at a given point in time, unless they are dropped by the firewall. If an ingress IP is specified for a service that runs in multiple instances, then each instance gets its own separate ingress IP address. However, if a service instance is stopped, then the ingress IP address is released and, depending on the ingress IP address allocation method, may be reused for another application.

Traffic that arrives at the ingress IP address is forwarded to the service instance's gateway network address.

Access for inbound connections to the ingress address can be restricted in a way similar to outbound traffic. In contrast with outbound access, however, inbound access is unrestricted by default. As with outbound access, inbound access may both be requested in the application specification and restricted by a site provider using network access rules.

It should be noted that an ingress IP address is also used as a source IP address for outgoing connections that are routed through the interface the ingress address is assigned to. The connections initiated by the service instance routed to leave the host via any other interface are masqueraded with the host's IP address for this interface.

The Avassa solution can use different methods for allocating ingress IP addresses; the method is configured by the site provider. See the tutorial on how to configure an ingress allocation method for a site. Available methods are:

- DHCP allocation. A site provider operates a DHCP server with support for the DHCP client ID feature, and the Avassa solution manages the DHCP clients for different service instances.

- Configured IP address pool available for allocation. A pool may be configured either per site (simplified configuration when all of the hosts within a single site are in the same LAN segment), or as separate pools per network interface on each host. The allocation is done internally by the Avassa solution.

- Port forwarding on the host's IP address. This ingress method allows the host's primary IP address to be reused and shared among multiple services running on the same host, provided that the services request disjoint sets of ports to be forwarded.

- Allocation by external script. The Avassa solution invokes a script provided by the site provider at both allocation and deallocation time. This is an experimental feature.
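The disjoint-port requirement of the port forwarding method can be checked ahead of deployment with a short Python sketch. The function name, the service names and the data layout are hypothetical; the sketch only illustrates the constraint and is not part of the Avassa tooling.

```python
from collections import defaultdict


def find_port_conflicts(requests):
    """Return {(protocol, port): [services]} for ports claimed more than once.

    `requests` maps a service name to a set of (protocol, port) pairs that
    the service wants forwarded on the host's IP address.
    """
    claimed = defaultdict(list)
    for service, ports in requests.items():
        for key in ports:
            claimed[key].append(service)
    return {key: sorted(names) for key, names in claimed.items() if len(names) > 1}


# Hypothetical services scheduled to the same host.
requests = {
    "web": {("tcp", 80), ("tcp", 443)},
    "metrics": {("tcp", 9090)},
    "proxy": {("tcp", 443)},
}
print(find_port_conflicts(requests))  # {('tcp', 443): ['proxy', 'web']}
```

An empty result means the requested port sets are disjoint and the services could share the host's address under this method.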

Attaching to host's default network namespace

In some rare use cases the default network operation mode, with a service attached to the gateway and application networks, does not meet the networking requirements of an application. One example is when Layer 2 connectivity to the host's physical network is required.

In order to meet the requirements of such cases, it is possible to attach an application's service to the host's default network namespace, so-called host networking. This is a very powerful option that should be used with extreme care. To prevent it from being used by mistake, a tenant needs to be granted the service-host-network-mode capability before this mode can be specified in an application specification.

It should be noted that this functionality greatly reduces the isolation between applications by giving the tenant the ability to, for example, send and eavesdrop on traffic on all network interfaces in the host's default namespace. Because the host acts as a router for all service instances scheduled to it, this capability gives the tenant read access to the traffic of all other tenants' applications.

It becomes even more powerful when combined with container-net-admin. This combination of capabilities gives the tenant full read/write access to the host's network interfaces, the routing table, the firewall and other parts of the networking stack, allowing such a container to, for example, redirect or intercept the network traffic destined to other tenants' applications or to supd itself. It also gives the application the power to break the connectivity with the host, whether by mistake or through malicious action.

Because the capability is granted system-wide, the best practice is to make sure that a tenant who is allowed to attach to the host's default network namespace is only assigned to sites that are exclusively dedicated to this tenant. This rules out the possibility that a malfunction of an application attached to the host's network has a negative effect on other tenants' applications.

When running a service attached to the host's default namespace, it is strongly recommended to avoid interfering with the system firewall configuration. This also means that the use of iptables in the host's default namespace is not allowed, because iptables may conflict with the nftables firewall used by supd. It is also recommended to avoid manipulating the primary IP address, because this may break the intra-site connectivity.

It must be noted that, due to a limitation of Docker, the host network mode cannot be used together with user namespaces. This means that the Docker daemon must be configured to disable user namespace remapping. See the section in the Add your first site tutorial on how to ensure that user namespaces are disabled in the Docker daemon configuration when installing supd.

Inter-site communication

Currently, the Avassa solution does not provide any specific functionality related to communication between applications running in different sites. If network connectivity is required, the suggested solution is to have an ingress IP address allocated to a service instance on one or both sites and to establish communication using this ingress IP address.

If there is no need for traditional socket connectivity, the application may use Volga, the Avassa publish-subscribe service, to send messages.