Control Tower On-Premises Installation

Introduction

This document describes all the steps needed to install the Control Tower on-premises. In short, you prepare one or more Linux hosts for the Control Tower, then download and configure the Control Tower components (backend and UI). The document ends with information on monitoring and operating the Control Tower cluster.

It is possible to install the Control Tower on a single host, but for full redundancy we recommend installing it on at least three hosts.

Preparation

supd is the name of the Control Tower (and Edge Enforcer) image.

Prerequisites

- One or more Linux hosts for the Control Tower; see Host sizing below. Common distribution choices are Ubuntu Server 22.04, Debian 11/12 and RHEL 9.x.

- On each host, install:
  - Docker CE
  - jq

- NTP time synchronization configured on each host.

- Access to the Avassa image registry. Avassa will supply an image registry for the Avassa software; make sure this registry is reachable from where the Control Tower is hosted.
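Before installing, a preflight check on each host can catch a missing prerequisite early. This is a minimal sketch. The helper name check_commands is hypothetical, and the timedatectl line in the example assumes a systemd-based distribution, which all the distributions listed above are.

```shell
# Sketch: check_commands is a hypothetical helper, not part of the Avassa tooling.
# check_commands CMD... : print every command that is not on PATH and
# return non-zero if at least one of them is missing.
check_commands() {
    missing=0
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "missing: $cmd" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example preflight on a Control Tower host:
# check_commands docker jq && docker info >/dev/null   # Docker daemon is running
# timedatectl show -p NTPSynchronized --value          # prints "yes" when NTP is in sync
```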

DNS

A Control Tower setup requires the following DNS names:

- ct.example.com (UI)
- api.ct.example.com (API)
- registry.ct.example.com (image registry)
- volga.ct.example.com (Volga)

Replace ct.example.com with your own domain.
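You can confirm from each host that all four names resolve. This is a minimal sketch using getent, which also consults /etc/hosts. The function names are hypothetical. The check only shows that a name resolves, not that it points at the right address.

```shell
# Sketch: ct_dns_names and check_ct_dns are hypothetical helper names.
# ct_dns_names DOMAIN : print the four names a Control Tower setup needs
ct_dns_names() {
    printf '%s\n' "$1" "api.$1" "registry.$1" "volga.$1"
}

# check_ct_dns DOMAIN : report every required name that does not resolve
check_ct_dns() {
    rc=0
    for name in $(ct_dns_names "$1"); do
        if ! getent hosts "$name" >/dev/null; then
            echo "does not resolve: $name" >&2
            rc=1
        fi
    done
    return "$rc"
}

# Example:
# check_ct_dns ct.example.com && echo "all Control Tower names resolve"
```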

Tenant and admin user

You must choose a tenant name (see Create the initial tenant) and an initial admin user (see Create initial admin user).

Host sizing

We recommend at least 10 GB of storage in addition to the operating system. Note that this does not include any storage for application images.

8 GB of RAM is enough for thousands of sites.
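You can compare a host against these numbers before installing. This is a minimal sketch, assuming a Linux host with /proc/meminfo and a POSIX df. The function name check_sizing is hypothetical. It checks free space on the filesystem that will hold /var/lib/supd, which only approximates "storage on top of the operating system".

```shell
# Sketch: check_sizing is a hypothetical helper name.
# check_sizing TARGET_PATH MIN_FREE_GIB MIN_MEM_GIB
# Prints a message and returns non-zero if the filesystem holding TARGET_PATH
# has less free space, or the host less total memory, than requested.
check_sizing() {
    target=$1 min_free_gib=$2 min_mem_gib=$3
    status=0
    # df -P prints one line per filesystem; column 4 is available 1K blocks
    free_kib=$(df -Pk "$target" | awk 'NR == 2 { print $4 }')
    # MemTotal in /proc/meminfo is reported in kB
    mem_kib=$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo)
    if [ "$free_kib" -lt $((min_free_gib * 1024 * 1024)) ]; then
        echo "$target: only $((free_kib / 1024 / 1024)) GiB free" >&2
        status=1
    fi
    if [ "$mem_kib" -lt $((min_mem_gib * 1024 * 1024)) ]; then
        echo "only $((mem_kib / 1024 / 1024)) GiB of RAM" >&2
        status=1
    fi
    return "$status"
}

# Example: 10 GB free for the supd state, and RAM for an 8 GB host
# check_sizing /var/lib 10 7
```

MemTotal is somewhat lower than the installed RAM, so the example uses 7 GiB as the memory threshold for an 8 GB host.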

Directory structure and backup

- /etc/supd/supd.conf: configuration
- /var/lib/supd: state directory
- /sbin/start-supd: start script
- /etc/systemd/system/supd.service: systemd service unit file

We recommend backing up these files and directories periodically.
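The sketch below writes one compressed archive per run for the paths above. The function name backup_supd is hypothetical. This document does not say whether a copy of /var/lib/supd taken while supd is running is consistent, so stopping supd on that host first is the cautious choice. The archive includes dist-ca.key, so keep it in a location with restricted access.

```shell
# Sketch: backup_supd is a hypothetical helper name.
# backup_supd DEST_DIR [ROOT]
# Writes a timestamped .tar.gz of the supd files into DEST_DIR and prints
# the archive path. ROOT defaults to / and mainly exists to ease testing.
backup_supd() {
    dest=$1
    root=${2:-/}
    archive="$dest/supd-backup-$(date +%Y%m%dT%H%M%S).tar.gz"
    mkdir -p "$dest" || return 1
    # Paths are stored relative to ROOT, so "tar -xzf ARCHIVE -C /" restores them in place
    tar -czf "$archive" -C "$root" \
        etc/supd \
        var/lib/supd \
        sbin/start-supd \
        etc/systemd/system/supd.service || return 1
    echo "$archive"
}

# Example (for instance from a nightly cron job):
# backup_supd /srv/backups/supd
```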

Installation

This section describes the installation for each host in the Control Tower cluster.

Configure Docker

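Configure the following in /etc/docker/daemon.json:

{
  "iptables": false
}

Restart Docker:

systemctl restart docker

Flush all Docker iptables rules. Note that these commands flush all rules in the filter and nat tables, not only the ones Docker created:

iptables -F
iptables -X
iptables -F -t nat
iptables -P FORWARD ACCEPT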
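Writing /etc/docker/daemon.json from scratch discards any settings already in it. The sketch below sets iptables to false and keeps the other keys. It assumes jq is installed, as listed in the prerequisites. The function name is hypothetical.

```shell
# Sketch: set_docker_iptables_off is a hypothetical helper; requires jq.
# set_docker_iptables_off [FILE]
# Sets "iptables": false in Docker's daemon.json and keeps any other
# settings already in the file. FILE defaults to /etc/docker/daemon.json.
set_docker_iptables_off() {
    daemon_file=${1:-/etc/docker/daemon.json}
    daemon_tmp="$daemon_file.tmp.$$"
    mkdir -p "$(dirname "$daemon_file")" || return 1
    if [ -s "$daemon_file" ]; then
        # Merge into the existing JSON object; jq fails on invalid JSON
        jq '. + {"iptables": false}' "$daemon_file" > "$daemon_tmp" ||
            { rm -f "$daemon_tmp"; return 1; }
    else
        printf '{\n  "iptables": false\n}\n' > "$daemon_tmp"
    fi
    # Replace the file in one step so Docker never reads a half-written file
    mv "$daemon_tmp" "$daemon_file"
}

# Example:
# set_docker_iptables_off && systemctl restart docker
```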

The supd image

The installation assumes that the supd image is available on the host. The supd image will be provided by Avassa.

supd.conf

Now prepare the initial supd.conf that is needed to start the Control Tower.

This step is crucial to get right. You need:

- A unique host ID per host in the cluster. It can be any string; use uuidgen if you want a random one.
- The hostname of each host (run hostname in a shell to verify).
- The IP address of each host.
- Make sure you update domain to match your DNS domain.

Create the configuration directory:

mkdir -p /etc/supd

Then create /etc/supd/supd.conf on each host. Each host gets its own host-id, while the hosts list describes the whole cluster:

host-id: fc6182bf-b267-4f58-84e2-a7c8d709af4e
initial-site-config:
  top-site-config:
    name: control-tower
    domain: ct.example.com
    hosts:
      - hostname: ip-10-0-0-10
        controller: true
        ip-addresses: [10.0.0.10]
      - hostname: ip-10-0-1-10
        controller: true
        ip-addresses: [10.0.1.10]
      - hostname: ip-10-0-2-10
        controller: true
        ip-addresses: [10.0.2.10]
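Because the file must be consistent on every host, it can help to generate it. This is a minimal sketch under these assumptions:

- The YAML nesting follows the example above. Check it against the supd.conf reference from Avassa.
- Every listed host is a controller.
- The function name gen_supd_conf and its HOSTNAME=IP argument format are hypothetical.

```shell
# Sketch: gen_supd_conf is a hypothetical helper; the YAML layout mirrors the example above.
# gen_supd_conf HOST_ID DOMAIN HOSTNAME=IP [HOSTNAME=IP ...]
# Prints a supd.conf in which every listed host is marked as a controller.
gen_supd_conf() {
    host_id=$1
    domain=$2
    shift 2
    cat <<EOF
host-id: $host_id
initial-site-config:
  top-site-config:
    name: control-tower
    domain: $domain
    hosts:
EOF
    for entry in "$@"; do
        # ${entry%%=*} is the part before the first "=", ${entry#*=} the part after it
        cat <<EOF
      - hostname: ${entry%%=*}
        controller: true
        ip-addresses: [${entry#*=}]
EOF
    done
}

# Example for one host (generate the host-id once and keep it stable):
# gen_supd_conf "$(uuidgen)" ct.example.com \
#     ip-10-0-0-10=10.0.0.10 ip-10-0-1-10=10.0.1.10 ip-10-0-2-10=10.0.2.10 \
#     > /etc/supd/supd.conf
```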

Initial certificate

The Control Tower hosts need certificates for the initial setup. Since the system replaces them once it has started, it is okay to use self-signed certificates. Here is an example command that generates a self-signed CA certificate and key:

# Create a CA certificate; 1826 days = 5 years
openssl req -x509 -new -nodes -newkey rsa:4096 -keyout dist-ca.key -sha256 -days 1826 -out dist-ca.pem -subj '/CN=Avassa Demo CA/C=SE/ST=Stockholm/L=Stockholm/O=Avassa'

On each host, create the state directory and copy the CA certificate and key into it:

mkdir -p /var/lib/supd/state
cp dist-ca.pem /var/lib/supd/state/dist-ca.pem
cp dist-ca.key /var/lib/supd/state/dist-ca.key

The same CA certificate and key must be copied to all hosts.
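After copying, you can check on each host that the key belongs to the certificate. Comparing a checksum across hosts shows whether the same certificate is present everywhere. This is a minimal sketch. The helper name ca_pair_matches is hypothetical. It compares the public key embedded in the certificate with the public key derived from the private key.

```shell
# Sketch: ca_pair_matches is a hypothetical helper name.
# ca_pair_matches CERT KEY : succeed only if KEY is the private key for CERT.
# Both openssl commands print the public key as a PEM SubjectPublicKeyInfo block.
ca_pair_matches() {
    cert_pub=$(openssl x509 -in "$1" -noout -pubkey) || return 1
    key_pub=$(openssl pkey -in "$2" -pubout) || return 1
    [ "$cert_pub" = "$key_pub" ]
}

# Example on each host:
# ca_pair_matches /var/lib/supd/state/dist-ca.pem /var/lib/supd/state/dist-ca.key && echo "CA pair OK"
# openssl x509 -in /var/lib/supd/state/dist-ca.pem -noout -subject -enddate
# sha256sum /var/lib/supd/state/dist-ca.pem   # should print the same hash on every host
```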

Extract files from the supd image

In this step you extract the supctl command-line tool, the systemd unit file and the script that starts supd, and put them in the right locations.

# Create (but do not start) a container from the image so files can be copied out of it
id=$(docker create avassa/supd:current)
docker cp "$id:/supd/bin/install-supd" /tmp/install-supd
docker cp "$id:/supd/bin/supctl" /usr/local/bin
docker rm -v "$id"

# Get start-supd
/tmp/install-supd --emit-start-supd > /sbin/start-supd

# Make start-supd executable
chmod +x /sbin/start-supd

# Extract systemd unit
/tmp/install-supd --emit-systemd-service > /etc/systemd/system/supd.service

Reload systemd so it picks up the new unit file, then enable and start the service:

systemctl daemon-reload
systemctl enable supd
systemctl start supd

After a short while you should see the supd container:

docker ps
CONTAINER ID   IMAGE          COMMAND                  CREATED       STATUS       PORTS     NAMES
5ee0ff197b7e   8f76259e14fb   "/docker-entrypoint.…"   4 hours ago   Up 4 hours             supd

Initial Setup

The initial setup steps below are run once on any of the hosts in the cluster.

Configure /etc/hosts

Add the following to /etc/hosts:

127.0.0.1 api

This makes the supctl commands below a bit simpler.

Reveal the seal key

# Where to store tokens, see below
export SUPCTL_PROFILE_DIR=$HOME/supctl
export SUPCTL_PORT=4646
supctl -k --host=api do strongbox system reveal-sealkey
{
  "sealkey": "v7NDixgQHMoohynZ60JEajkVyP5OUKPHnb2LRM2zR+Q=",
  "accessor": "b2589583-172b-461a-89a4-b640624eb1c2",
  "token": "c54e3fe1-13a3-4ae0-8ddb-1e5af033cb43",
  "creation-time": "2023-08-17T14:55:41.046065Z",
  "shares": [
    "1:LZf41vu53kz+jCVNsLMwoM7pUpdQv+LYPDAldFZfMEw=",
    "2:8JI9XZLZyhIvguQWUVvHJoSAgmxkQ6o+QizPfxLewB8=",
    "3:YraGAHFwCJT5ieiCCqqz7HN8GAV6rOsh46FhT4kyt7c=",
    "4:5Ejrl0i9jGTEwd2Vss/uwXv/zdpCEacTBOAXQ5nz7e0=",
    "5:dmxQyqsUTuISytEB6T6aC4wDV7Nc/uYMpW25cwIfmkU="
  ]
}

These keys are the only way to unlock the Control Tower if the hosts are rebooted or the supd container is restarted.
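Because the seal key output is needed for a manual unseal later, save it somewhere only you can read. This is a minimal sketch that writes standard input to a file readable only by the current user. The helper store_secret and the file name in the example are hypothetical. You should still keep a copy off the Control Tower hosts.

```shell
# Sketch: store_secret is a hypothetical helper name.
# store_secret FILE : copy standard input to FILE with mode 600
store_secret() {
    # umask in a subshell leaves the caller's umask unchanged;
    # chmod also tightens a file that already existed with wider permissions
    ( umask 077 && cat > "$1" ) && chmod 600 "$1"
}

# Example: pipe the reveal-sealkey output into it when you run it,
# or run "store_secret FILE", paste the JSON you already have and press Ctrl-D:
# supctl -k --host=api do strongbox system reveal-sealkey | store_secret "$HOME/ct-sealkey.json"
```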

Update the CA certificate used by supctl; this gets rid of the certificate warnings:

supctl -k do get-api-ca-cert > /dev/null

Create the initial tenant

This assumes that the tenant is called example-inc.

supctl -S create tenants <<EOF
name: example-inc
kind: site-provider
EOF

Then create the root token:

supctl -S do strongbox token create-root --name example-inc
Warning: Providing --name on the command line is insecure. Consider using --name-prompt instead.
{
  "accessor": "fd8fccc7-eac8-4542-9c76-f7161eba2d11",
  "token": "bd72399e-2365-4650-97a2-c6872ae65f12",
  "creation-time": "2023-08-17T15:00:49.187899Z"
}

This token is the only way to access the Control Tower if you lose the credentials created below.

The above payload is also stored in $HOME/supctl/tenant.

Create initial admin user

You can choose a different name than fred@example.com, and you should choose your own password.

supctl -r create strongbox authentication userpass <<EOF
name: fred@example.com
password: secret-password
distribute:
  to: all
token-ttl: 14d
token-policies:
  - user
  - root
EOF
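A password typed into a heredoc shows up in the terminal and can end up in your shell history. The sketch below prompts for the password without echoing it. It assumes supctl reads the YAML from standard input, as it does with the heredoc above. The helper name userpass_yaml is hypothetical, and the other fields are copied from the example.

```shell
# Sketch: userpass_yaml is a hypothetical helper, not part of supctl.
# userpass_yaml NAME PASSWORD : print the userpass entry used above, with the
# password as a YAML single-quoted scalar so characters such as : or # survive
userpass_yaml() {
    # Inside YAML single quotes, a literal ' is written as ''
    quoted_pw=$(printf '%s' "$2" | sed "s/'/''/g")
    cat <<EOF
name: $1
password: '$quoted_pw'
distribute:
  to: all
token-ttl: 14d
token-policies:
  - user
  - root
EOF
}

# Example: read the password without echoing it, then create the user
# printf 'Password: ' >&2; stty -echo; read -r pw; stty echo; echo >&2
# userpass_yaml fred@example.com "$pw" | supctl -r create strongbox authentication userpass
# unset pw
```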

Verify access

supctl do login fred@example.com
{
  "token": "460213e3-a851-4069-a34e-462bef28829f",
  "expires-in": 1209600,
  "expires": "2023-09-04T08:45:34.241128Z",
  "accessor": "bc3f7ab7-6b48-4bc1-9409-649f9de4bbd1",
  "creation-time": "2023-08-21T08:45:34.241128Z",
  "renewal-time": "2023-08-21T08:45:34.241128Z",
  "totp-required": false,
  "totp-enabled": false
}

supctl show system sites
- name: control-tower
  type: top
  domain: control-tower.api.ct.example.com
  labels:
    system/type: top
    system/name: control-tower
  quarantined: false
  allow-local-unseal: false
  inter-cluster-address:
    ip:
      - 10.0.0.10
  ingress-allocation-method: disabled
  hosts:
    - host-id: 969418c3-80a3-400c-a173-946293c048f0
      controller: true
      cluster-hostname: control-tower-001
      hostname: ct-1
      supd-version: dev-9b9127fe
      smbios:
        chassis-type: "1"
        chassis-vendor: QEMU
        chassis-version: pc-q35-6.2
        product-name: Standard PC (Q35 + ICH9, 2009)
        product-uuid: 969418c3-80a3-400c-a173-946293c048f0
        product-version: pc-q35-6.2
      platform:
        hostname: ct-1
        architecture: x86_64
        total-memory: 4009492 KiB
        vcpus: 2
        operating-system: Debian GNU/Linux 12 (bookworm)
        kernel-version: 6.1.0-9-amd64
        docker:
          version: 24.0.5
          api-version: "1.43"
          os: linux
          arch: amd64
          git-commit: a61e2b4
          components:
            - name: Engine
              version: 24.0.5
            - name: containerd
              version: 1.6.22
            - name: runc
              version: 1.1.8
            - name: docker-init
              version: 0.19.0
...

Configure how the sites will reach the Control Tower

supctl -r merge system sites control-tower <<EOF
inter-cluster-address:
  dnsname: api.ct.example.com
EOF

The dnsname must match what is in DNS and what the sites will connect to.

Persist supctl settings

Optionally, add the following lines to a shell startup file such as $HOME/.bashrc so they are set automatically at login. This makes supctl much easier to use.

export SUPCTL_PROFILE_DIR=$HOME/supctl
export SUPCTL_PORT=4646
# Add supctl completion
source <(supctl completion)

Configure port forwarding for the UI

To allow access to the UI, you must enable port forwarding by changing ingress-allocation-method to port-forward:

supctl -r merge system sites control-tower <<EOF
ingress-allocation-method: port-forward
EOF

Hide Control Tower in the UI

Unless this is set, the Control Tower will show up as a site in the UI (rarely what you want).

supctl -S create platform-settings <<EOF
system-owned-sites:
  - control-tower
EOF

UI installation and supd/UI upgrades

This section enables automatic upgrades of the Control Tower and the UI component, and also installs the UI. The registry and credentials must match your environment.

Configure the registry credentials. If your registry doesn't require credentials, you can skip this step.

supctl -S replace strongbox vaults avassa-registry <<EOF
name: avassa-registry
distribute:
  to: none
EOF

supctl -S replace strongbox vaults avassa-registry secrets credentials <<EOF
name: credentials
data:
  token: <replace me>
EOF

Configure the system for automatic updates:

supctl -S replace system update <<EOF
registry:
  address: <replace me with registry received from Avassa>
  port: 443
  authentication: strongbox
check:
  interval: 30m
  randomize: true
images:
  supd:
    repository: avassa/supd
    tag: new
    keep: 3
  ui:
    ui-repository: control-tower/ui
    tag: latest
    keep: 3
EOF

Verify that the UI has started. Note that it may take a couple of minutes for the UI to be installed.

supctl -S show applications avassa-ui --fields name,oper-status
name: avassa-ui
oper-status: running
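Since the UI can take a few minutes to come up, a script may need to poll instead of checking once. This is a minimal sketch of a generic retry helper. The names wait_until and ui_running are hypothetical. ui_running assumes SUPCTL_PROFILE_DIR and SUPCTL_PORT are exported as in the steps above, and simply looks for the oper-status line shown in the output.

```shell
# Sketch: wait_until and ui_running are hypothetical helper names.
# wait_until LIMIT_SECONDS INTERVAL_SECONDS COMMAND [ARGS...]
# Re-runs COMMAND every INTERVAL seconds (use 1 or more) until it succeeds,
# or returns non-zero once about LIMIT seconds have been spent waiting.
wait_until() {
    limit=$1 interval=$2
    shift 2
    waited=0
    until "$@"; do
        if [ "$waited" -ge "$limit" ]; then
            echo "timed out after ${limit}s: $*" >&2
            return 1
        fi
        sleep "$interval"
        waited=$((waited + interval))
    done
    return 0
}

# ui_running : succeeds once supctl reports the UI application as running
ui_running() {
    supctl -S show applications avassa-ui --fields name,oper-status 2>/dev/null |
        grep -q 'oper-status: running'
}

# Example: wait up to 10 minutes, polling every 15 seconds
# wait_until 600 15 ui_running && echo "UI is running"
```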

Maintenance and Operations

Manual unseal

If all Control Tower nodes are restarted at the same time, the decryption keys (held only in memory) are lost. In that case you must manually unseal the Control Tower:

supctl do strongbox system unseal --sealkey v7NDixgQHMoohynZ60JEajkVyP5OUKPHnb2LRM2zR+Q=

Here v7ND... is the sealkey value returned when you revealed the seal key above.

Control Tower Operations

Control Tower operations means making sure that the Control Tower runs well. It often corresponds to normal Linux admin activities, like checking disk space and system load. This can be done with your preferred IT monitoring tools as well as Avassa's built-in host metrics. In some cases, you might need to investigate Avassa application issues; Avassa provides command-line tools to drill down and inspect them. Proactive monitoring of specific Control Tower alerts and logs should be automated.

Avassa continuously releases updates to the Control Tower (and Edge Enforcer) software, and it is the responsibility of the customer to set up an automated pipeline to receive new updates from Avassa. Avassa will provide access to a container registry where updates can be fetched. The customer needs to make these updates available to the Control Tower, for example by hosting a container registry in a DMZ or through an air-gapped solution; details will be defined in the delivery project.

Edge Site Operations

The Control Tower performs basic monitoring of the infrastructure on the sites: hosts, disk, CPU load and edge cluster health. We recommend that the customer add their standard tools for monitoring the hosts.

AWS Automated installation

Overview

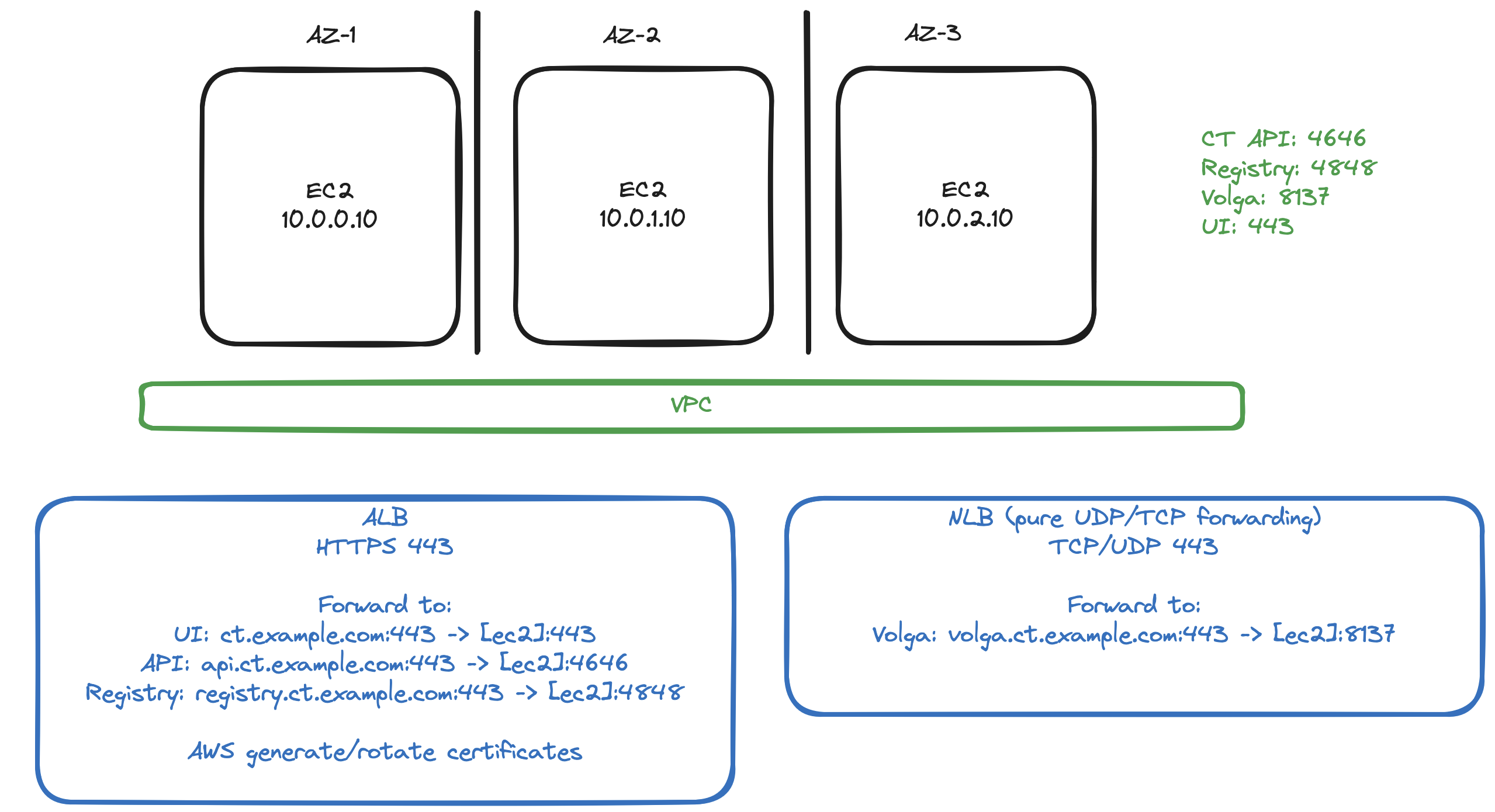

The Terraform/OpenTofu script below automates most of the infrastructure bring-up in AWS. It sets up three EC2 instances, an Application Load Balancer (ALB) for the UI, API and registry, and a Network Load Balancer (NLB) for Volga traffic.

The script does not set up a CA in AWS, nor does it make changes to your public DNS.

The CA should be configured to create certificates for:

- ct.example.com (UI)
- api.ct.example.com (API)
- registry.ct.example.com (image registry)
- volga.ct.example.com (Volga)

The DNS should be configured with CNAME records that resolve the following names to the ALB:

- ct.example.com
- api.ct.example.com
- registry.ct.example.com

The DNS should be configured with a CNAME record that resolves the following name to the NLB:

- volga.ct.example.com
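For DNS managed in a BIND-style zone file, the records could look like the output of the sketch below. It makes these assumptions:

- ct.example.com is not the apex of its DNS zone. A CNAME is not allowed at a zone apex; in Route 53 an alias record is the usual alternative.
- The TTL of 300 seconds is an arbitrary example.
- The helper name ct_cname_records is hypothetical.

The ALB and NLB DNS names are shown in the AWS console and in the dns_name attribute of the aws_lb resources.

```shell
# Sketch: ct_cname_records is a hypothetical helper; TTL 300 is an example value.
# ct_cname_records DOMAIN ALB_DNS_NAME NLB_DNS_NAME
# Prints CNAME records: UI, API and registry point at the ALB, Volga at the NLB.
ct_cname_records() {
    domain=$1 alb=$2 nlb=$3
    for name in "$domain" "api.$domain" "registry.$domain"; do
        printf '%s. 300 IN CNAME %s.\n' "$name" "$alb"
    done
    printf '%s. 300 IN CNAME %s.\n' "volga.$domain" "$nlb"
}

# Example (the load balancer names are placeholders):
# ct_cname_records ct.example.com ct-alb-123.eu-north-1.elb.amazonaws.com ct-nlb-456.elb.eu-north-1.amazonaws.com
```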

Terraform/OpenTofu

The script assumes that the following variables are set, for example in a terraform.tfvars file:

ssh_pub_key = "Public ssh key to access instances"
# Make sure you change this to your domain
ct_domain = "ct.example.com"
# Certificate ARN, used by the ALB to serve HTTPS
cert_arn = "arn:aws:acm:eu-north-1:000000000:certificate/00000000-0000-0000-aaaa-000000000000"

terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5"
    }
  }
}

provider "aws" {
  # Please change these to fit your setup
  region  = "eu-north-1"
  profile = "avassa-se"

  default_tags {
    tags = {
      project = "ct-on-prem"
    }
  }
}

variable "ct_domain" {
  type = string
}

# Note: remove this throughout the script if SSH access is not needed
variable "ssh_pub_key" {
  type = string
}

variable "instance_type" {
  type    = string
  default = "c7i.large"
}

variable "availability_zones" {
  default = [
    "eu-north-1a",
    "eu-north-1b",
    "eu-north-1c"
  ]
}

variable "ct_ips" {
  default = ["10.0.0.10", "10.0.1.10", "10.0.2.10"]
}

resource "aws_vpc" "main" {
  cidr_block = "10.0.0.0/16"
}

resource "aws_subnet" "ct_subnets" {
  count             = length(var.availability_zones)
  vpc_id            = aws_vpc.main.id
  cidr_block        = cidrsubnet("10.0.0.0/16", 8, count.index)
  availability_zone = var.availability_zones[count.index]

  tags = {
    Name = "ct-subnet-${count.index}"
  }
}

# Create an Internet Gateway
resource "aws_internet_gateway" "igw" {
  vpc_id = aws_vpc.main.id
}

# Create a Route Table
resource "aws_route_table" "public" {
  vpc_id = aws_vpc.main.id

  route {
    cidr_block = "0.0.0.0/0"
    gateway_id = aws_internet_gateway.igw.id
  }
}

resource "aws_route_table_association" "rt-assoc" {
  count          = length(var.availability_zones)
  subnet_id      = aws_subnet.ct_subnets[count.index].id
  route_table_id = aws_route_table.public.id
}

# Security Groups
resource "aws_security_group" "instance_sg" {
  name        = "ct_instances"
  description = "Traffic between CT instances"
  vpc_id      = aws_vpc.main.id
}

resource "aws_security_group" "nlb_sg" {
  name        = "ct_nlb"
  description = "NLB for volga"
  vpc_id      = aws_vpc.main.id
}

resource "aws_security_group" "alb_sg" {
  name        = "ct_alb"
  description = "ALB for UI, API and registry"
  vpc_id      = aws_vpc.main.id
}

# Security Group Rules for CT Instances
resource "aws_security_group_rule" "tcp_volga_nlb" {
  type                     = "ingress"
  security_group_id        = aws_security_group.instance_sg.id
  source_security_group_id = aws_security_group.nlb_sg.id
  protocol                 = "tcp"
  from_port                = 8137
  to_port                  = 8137
}

resource "aws_security_group_rule" "udp_volga_nlb" {
  type                     = "ingress"
  security_group_id        = aws_security_group.instance_sg.id
  source_security_group_id = aws_security_group.nlb_sg.id
  protocol                 = "udp"
  from_port                = 8137
  to_port                  = 8137
}

# Needed for integration with an external DNS server
#resource "aws_security_group_rule" "dns_access" {
#  type              = "ingress"
#  security_group_id = aws_security_group.instance_sg.id
#  cidr_blocks       = ["0.0.0.0/0"]
#  protocol          = "udp"
#  from_port         = 53
#  to_port           = 53
#}

resource "aws_security_group_rule" "ui_from_alb" {
  type                     = "ingress"
  security_group_id        = aws_security_group.instance_sg.id
  source_security_group_id = aws_security_group.alb_sg.id
  protocol                 = "tcp"
  from_port                = 443
  to_port                  = 443
}

resource "aws_security_group_rule" "api_from_alb" {
  type                     = "ingress"
  security_group_id        = aws_security_group.instance_sg.id
  source_security_group_id = aws_security_group.alb_sg.id
  protocol                 = "tcp"
  from_port                = 4646
  to_port                  = 4646
}

resource "aws_security_group_rule" "registry_from_alb" {
  type                     = "ingress"
  security_group_id        = aws_security_group.instance_sg.id
  source_security_group_id = aws_security_group.alb_sg.id
  protocol                 = "tcp"
  from_port                = 4848
  to_port                  = 4848
}

resource "aws_security_group_rule" "internal_tcp" {
  type                     = "ingress"
  security_group_id        = aws_security_group.instance_sg.id
  source_security_group_id = aws_security_group.instance_sg.id
  protocol                 = "tcp"
  from_port                = 4668
  to_port                  = 4668
}

resource "aws_security_group_rule" "allow_all_outbound" {
  type              = "egress"
  security_group_id = aws_security_group.instance_sg.id
  cidr_blocks       = ["0.0.0.0/0"]
  protocol          = "-1"
  from_port         = 0
  to_port           = 0
}

resource "aws_security_group_rule" "ssh_access" {
  type              = "ingress"
  security_group_id = aws_security_group.instance_sg.id
  # This should be tightened in a prod setup
  cidr_blocks = ["0.0.0.0/0"]
  protocol    = "tcp"
  from_port   = 22
  to_port     = 22
}

# ALB Rules
resource "aws_security_group_rule" "alb_https_internet" {
  type              = "ingress"
  security_group_id = aws_security_group.alb_sg.id
  cidr_blocks       = ["0.0.0.0/0"]
  protocol          = "tcp"
  from_port         = 443
  to_port           = 443
}

resource "aws_security_group_rule" "alb_to_ui" {
  type                     = "egress"
  security_group_id        = aws_security_group.alb_sg.id
  source_security_group_id = aws_security_group.instance_sg.id
  protocol                 = "tcp"
  from_port                = 443
  to_port                  = 443
}

resource "aws_security_group_rule" "alb_to_api" {
  type                     = "egress"
  security_group_id        = aws_security_group.alb_sg.id
  source_security_group_id = aws_security_group.instance_sg.id
  protocol                 = "tcp"
  from_port                = 4646
  to_port                  = 4646
}

resource "aws_security_group_rule" "alb_to_registry" {
  type                     = "egress"
  security_group_id        = aws_security_group.alb_sg.id
  source_security_group_id = aws_security_group.instance_sg.id
  protocol                 = "tcp"
  from_port                = 4848
  to_port                  = 4848
}

# NLB Rules
resource "aws_security_group_rule" "nlb_udp_volga" {
  type              = "ingress"
  security_group_id = aws_security_group.nlb_sg.id
  cidr_blocks       = ["0.0.0.0/0"]
  protocol          = "udp"
  from_port         = 443
  to_port           = 443
}

resource "aws_security_group_rule" "nlb_tcp_volga" {
  type              = "ingress"
  security_group_id = aws_security_group.nlb_sg.id
  cidr_blocks       = ["0.0.0.0/0"]
  protocol          = "tcp"
  from_port         = 443
  to_port           = 443
}

resource "aws_security_group_rule" "nlb_udp_to_instance" {
  type                     = "egress"
  security_group_id        = aws_security_group.nlb_sg.id
  source_security_group_id = aws_security_group.instance_sg.id
  protocol                 = "udp"
  from_port                = 8137
  to_port                  = 8137
}

resource "aws_security_group_rule" "nlb_tcp_to_instance" {
  type                     = "egress"
  security_group_id        = aws_security_group.nlb_sg.id
  source_security_group_id = aws_security_group.instance_sg.id
  protocol                 = "tcp"
  from_port                = 8137
  to_port                  = 8137
}

resource "aws_key_pair" "ssh_key" {
  key_name   = "ct-on-prem"
  public_key = var.ssh_pub_key
}

data "aws_ami" "debian" {
  most_recent = true

  filter {
    name   = "name"
    values = ["debian-12*"]
  }

  filter {
    name   = "architecture"
    values = ["x86_64"]
  }

  owners = ["136693071363"]
}

# Create instances
resource "aws_instance" "ct" {
  count         = length(var.availability_zones)
  instance_type = var.instance_type
  ami           = data.aws_ami.debian.id
  # Reference the key pair resource so it is created before the instances
  key_name                    = aws_key_pair.ssh_key.key_name
  vpc_security_group_ids      = [aws_security_group.instance_sg.id]
  subnet_id                   = aws_subnet.ct_subnets[count.index].id
  private_ip                  = var.ct_ips[count.index]
  associate_public_ip_address = true

  root_block_device {
    volume_size = 64
    encrypted   = true
  }
}

output "public_ips" {
  value = [for i in aws_instance.ct : i.public_ip]
}

# NLB
resource "aws_lb_target_group" "volga" {
  name        = "volga-tg"
  port        = 8137
  protocol    = "TCP_UDP"
  target_type = "ip"
  vpc_id      = aws_vpc.main.id
}

resource "aws_lb" "nlb" {
  name               = "ct-nlb"
  internal           = false
  load_balancer_type = "network"
  security_groups    = [aws_security_group.nlb_sg.id]
  subnets            = [for subnet in aws_subnet.ct_subnets : subnet.id]
}

resource "aws_lb_target_group_attachment" "volga" {
  count            = length(var.availability_zones)
  target_group_arn = aws_lb_target_group.volga.arn
  target_id        = var.ct_ips[count.index]
  port             = 8137
}

resource "aws_lb_listener" "volga" {
  load_balancer_arn = aws_lb.nlb.arn
  port              = "443"
  protocol          = "TCP_UDP"

  default_action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.volga.arn
  }
}

# ALB
resource "aws_lb_target_group" "api" {
  name        = "api-tg"
  port        = 4646
  protocol    = "HTTPS"
  target_type = "ip"
  vpc_id      = aws_vpc.main.id

  health_check {
    protocol          = "HTTPS"
    healthy_threshold = 2
    path              = "/healthz"
  }
}

resource "aws_lb_target_group_attachment" "api" {
  count            = length(var.availability_zones)
  target_group_arn = aws_lb_target_group.api.arn
  target_id        = var.ct_ips[count.index]
  port             = 4646
}

resource "aws_lb_target_group" "registry" {
  name        = "registry-tg"
  port        = 4848
  protocol    = "HTTPS"
  target_type = "ip"
  vpc_id      = aws_vpc.main.id

  health_check {
    protocol          = "HTTPS"
    healthy_threshold = 2
    path              = "/healthz"
  }
}

resource "aws_lb_target_group_attachment" "registry" {
  count            = length(var.availability_zones)
  target_group_arn = aws_lb_target_group.registry.arn
  target_id        = var.ct_ips[count.index]
  port             = 4848
}

resource "aws_lb_target_group" "ui" {
  name        = "ui-tg"
  port        = 443
  protocol    = "HTTPS"
  target_type = "ip"
  vpc_id      = aws_vpc.main.id

  health_check {
    protocol          = "HTTPS"
    healthy_threshold = 2
    path              = "/healthz"
  }
}

resource "aws_lb_target_group_attachment" "ui" {
  count            = length(var.availability_zones)
  target_group_arn = aws_lb_target_group.ui.arn
  target_id        = var.ct_ips[count.index]
  port             = 443
}

resource "aws_lb" "alb" {
  name               = "ct-alb"
  internal           = false
  load_balancer_type = "application"
  security_groups    = [aws_security_group.alb_sg.id]
  subnets            = [for subnet in aws_subnet.ct_subnets : subnet.id]
}

variable "cert_arn" {
  type = string
}

resource "aws_lb_listener" "alb" {
  load_balancer_arn = aws_lb.alb.arn
  port              = "443"
  protocol          = "HTTPS"
  ssl_policy        = "ELBSecurityPolicy-2016-08"
  certificate_arn   = var.cert_arn

  default_action {
    type = "fixed-response"

    fixed_response {
      content_type = "text/plain"
      status_code  = 200
    }
  }
}

resource "aws_lb_listener_rule" "api" {
  listener_arn = aws_lb_listener.alb.arn
  priority     = 100

  action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.api.arn
  }

  condition {
    host_header {
      values = ["api.${var.ct_domain}"]
    }
  }
}

resource "aws_lb_listener_rule" "registry" {
  listener_arn = aws_lb_listener.alb.arn
  priority     = 90

  action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.registry.arn
  }

  condition {
    host_header {
      values = ["registry.${var.ct_domain}"]
    }
  }
}

resource "aws_lb_listener_rule" "ui" {
  listener_arn = aws_lb_listener.alb.arn
  priority     = 80

  action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.ui.arn
  }

  condition {
    host_header {
      values = [var.ct_domain]
    }
  }
}