Application persistent storage

Introduction

Many applications need to save state persistently so that it survives an application crash or restart. Some ways to achieve this are:

- save persistent state in an external database on a different site

- save persistent state in an external database within the same site

- save persistent state on disk

While saving persistent state in an external database on a different site may be the most robust option, it is not always feasible because of latency and autonomy limitations. Keeping a database running locally in an edge site may be a feasible alternative, but latency requirements may still make storing the persistent state on a local disk (or another kind of locally mounted storage) preferable. In addition, the database service itself may need to store its data persistently.

An application may store data locally on disk in three ways:

- by writing it into the container writable layer

  - private to the container

  - cleared when the service is upgraded or restarted

- by writing it into an ephemeral volume

  - may be shared between multiple containers within the service

  - the data is preserved until the application is removed or the service has to be rescheduled onto a different host

- by writing it into a persistent volume

  - may be shared between multiple containers within the service

  - the data is preserved after the application is removed, until the volume is explicitly removed by an operator
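The lifetimes listed above can be summarized in a small lookup table. This is only an illustrative sketch: the `Storage` and `Event` names and the `survives` helper are hypothetical and are not part of any Avassa API.

```python
from enum import Enum


class Storage(Enum):
    WRITABLE_LAYER = "container writable layer"
    EPHEMERAL_VOLUME = "ephemeral volume"
    PERSISTENT_VOLUME = "persistent volume"


class Event(Enum):
    SERVICE_RESTART = "service restarted or upgraded"
    MOVED_TO_OTHER_HOST = "service rescheduled onto a different host"
    APPLICATION_REMOVED = "application removed"


# Events after which the data is gone, per storage kind (taken from the list above).
# A persistent volume is only removed by an explicit operator action, which is
# not modeled as an event here.
DATA_LOST_ON = {
    Storage.WRITABLE_LAYER: {
        Event.SERVICE_RESTART,
        Event.MOVED_TO_OTHER_HOST,
        Event.APPLICATION_REMOVED,
    },
    Storage.EPHEMERAL_VOLUME: {
        Event.MOVED_TO_OTHER_HOST,
        Event.APPLICATION_REMOVED,
    },
    Storage.PERSISTENT_VOLUME: set(),
}


def survives(storage: Storage, event: Event) -> bool:
    """Return True if data in the given storage kind outlives the event."""
    return event not in DATA_LOST_ON[storage]
```

For example, `survives(Storage.EPHEMERAL_VOLUME, Event.SERVICE_RESTART)` is true, while the same call for `Storage.WRITABLE_LAYER` is false.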

The term volume actually means two things in this context:

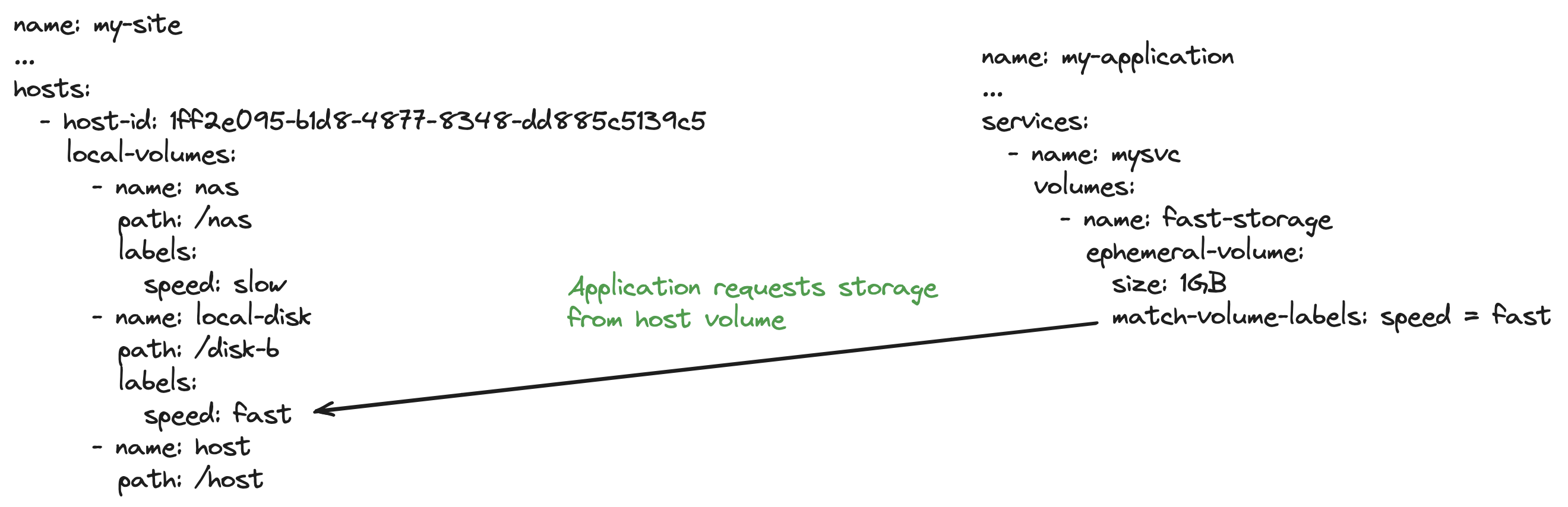

- Application volumes, e.g. fast-storage in the example below. An application may contain multiple volumes and these volumes can be allocated in different (or the same) host volumes. By using labels you can control on what host volume an application volume is allocated. If no volume label is specified, the first host volume is selected.

- Host volumes, e.g. /var/lib/supd/volumes/disk-b. This declares to the system that it can create application volumes in this location. Note again, multiple application volumes can be allocated from the same host volume.

Backing up this data is outside the scope of the Edge Enforcer, so care should be taken to back up important data from the edge nodes.

Overview

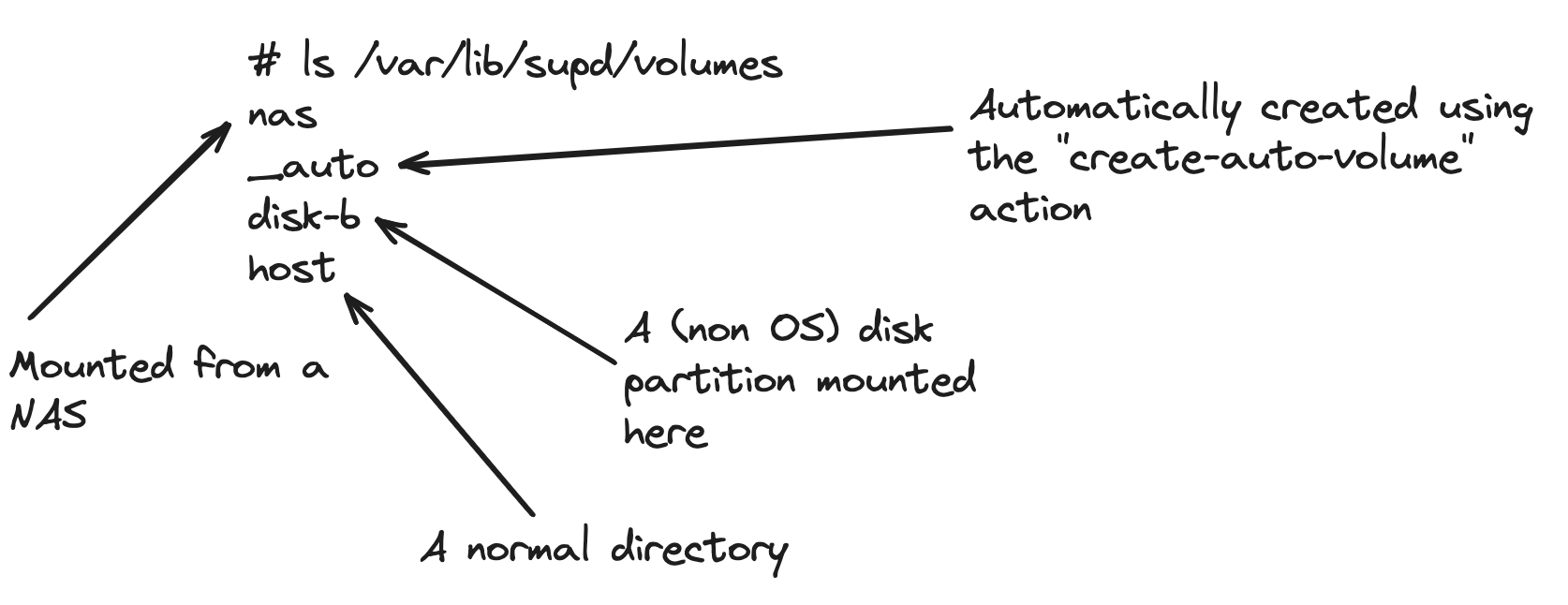

Host volumes, where applications can store data, are configured in the host OS. The different volumes must be mounted in /var/lib/supd/volumes and configured in the site configuration. A host volume can be as simple as a directory created there, or it can be a mounted disk such as SAN storage.

The example below is exaggerated; multiple host volumes are very rarely needed. Note that containers often require multiple application volumes, but these can be allocated from the same host volume.

The directories (mounts) are then declared in the site configuration and applications can request storage from the host volumes.

The _auto directory is not declared in the site configuration. The configuration for the auto volume is implicit after create-auto-volume is invoked; see how-to.
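As a rough aid when preparing the site configuration, the directories that would need declaring can be listed from the volumes root. This is a minimal sketch under stated assumptions: `host_volume_candidates` is a hypothetical helper, not an Avassa tool, and it only inspects the filesystem layout described above.

```python
from pathlib import Path

VOLUMES_ROOT = Path("/var/lib/supd/volumes")  # location named in the text
AUTO_DIR = "_auto"  # implicit auto volume, not declared in the site configuration


def host_volume_candidates(root: Path = VOLUMES_ROOT) -> list[str]:
    """Return the sorted names of directories under root, excluding _auto.

    Plain directories and mount points are treated alike, since a host
    volume may be either.
    """
    if not root.is_dir():
        return []
    return sorted(
        entry.name
        for entry in root.iterdir()
        if entry.is_dir() and entry.name != AUTO_DIR
    )
```

Regular files under the root are ignored, and a missing root yields an empty list rather than an error.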

Volume labels are optional, but can be used by the application to request storage from a particular volume. If the application doesn't specify a volume label to match, the first volume (according to the order in the site config) is selected.

Container writable layer

The container filesystem consists of several read-only layers of an image, with a writable, initially empty, container layer mounted on top. While the container layer is stored on disk, it does not provide persistence across container restarts or rescheduling, because the container is recreated from scratch upon restart. The container writable layer should therefore be viewed as temporary storage only, with the same lifetime as the container itself.

The writable layers for all containers are managed by Docker, and stored in a flat structure on a single filesystem. It is possible for one container to deplete disk space for other containers, hence it is highly recommended to use a filesystem with quota support and set limits both on individual containers and per host for all containers. See details in storage configuration how-to and limiting container layer size per-application.

Volumes declared in container images

A filesystem path requiring externally mounted storage may be specified by a VOLUME instruction in the Dockerfile used to build an image. Such filesystem paths have to be stored outside of the container writable layer. The Avassa solution does not provide any implicit storage for such volumes, but instead requires that they are explicitly specified in the application specification. In particular, it is suggested that either ephemeral volumes or persistent volumes are used to mount the storage space required by the VOLUME instruction.

If a volume required by an image is not present in the application specification, then the application fails to start. The error is reported at application startup time, when the image is downloaded and inspected. However, it is easy to detect during testing, because the set of volumes required by a particular image is static.

Note that the mount path in the application specification must exactly match the path specified in the image.
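The check described in this section can be approximated before deployment. The sketch below assumes that the image's VOLUME paths and the mount paths from the application specification have already been extracted into plain lists of strings. `missing_volume_mounts` is a hypothetical helper, and the exact-match rule is modeled as simple string equality.

```python
def missing_volume_mounts(image_volumes, spec_mount_paths):
    """Return the image VOLUME paths that have no identical mount path.

    Paths are compared as plain strings, so "/data" and "/srv/data" do not match.
    """
    mounted = set(spec_mount_paths)
    return sorted(path for path in set(image_volumes) if path not in mounted)


# Example: the image declares two volumes, the specification mounts only one.
image_volumes = ["/data", "/var/log/app"]
spec_mount_paths = ["/data"]
unmounted = missing_volume_mounts(image_volumes, spec_mount_paths)
```

An empty result means every path declared in the image has a matching mount. Any path that is returned would cause the startup failure described above.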

Ephemeral and persistent volumes

Basics

Ephemeral and persistent volumes are the two kinds of local storage-based volumes. Local storage here means any filesystem mounted locally on the host running the Edge Enforcer, whether it stores the data on the local disk or is any other kind of filesystem. Local storage-based volumes can be mounted into the containers' filesystems with a corresponding instruction in the application specification. This is the preferred way for applications to store data on disk so that it survives container restart in a range of scenarios. In addition, local storage-based volumes enable containers to share common storage space: an ephemeral or persistent volume may be mounted by multiple containers within a single service.

A notable difference between ephemeral and persistent volumes is that ephemeral volumes inherit the lifecycle of the service defining the volume. In contrast, persistent volumes have their own lifecycle and are never removed without a manual action from an operator: an application owner may delete a persistent volume, or a site provider may delete the host volume that the persistent volume is scheduled to. See the more detailed descriptions in the scheduling of ephemeral volumes and scheduling of persistent volumes sections.

Ephemeral and persistent volumes are stored in a hierarchical directory structure managed by the Avassa solution on the host. Directories corresponding to individual ephemeral volumes are then bind-mounted into the corresponding containers' mount namespaces at a configured path.

Host volumes

Service ephemeral and persistent volumes are placed on host volumes. A host volume is a storage space defined by the site provider for keeping different services' ephemeral and persistent volumes. Multiple host volumes may be defined on a single host. The site provider can assign labels to host volumes, for example to indicate the different properties of different host volumes. An application owner may then define a host volume label match expression, based on the labels defined and communicated by the site provider, to choose the most appropriate host volume for an ephemeral or persistent volume. See a configuration example in storage configuration how-to.

A service volume requires a volume size to be specified. However, this limit is only enforceable if the underlying filesystem supports the project quota feature. Verify that the host volume is located on a filesystem with quota support as described in storage configuration how-to.

Scheduling of ephemeral volumes

When a new service instance with defined ephemeral volumes is scheduled, it needs to be placed on a certain host, and the ephemeral volumes need to be placed on host volumes within this host. The application specification may limit the choice of hosts by specifying a host label match expression for the service, and the choice of host volumes by specifying a host volume label match expression. Besides matching the label match expression, the host volume needs to have enough unallocated space available for the ephemeral volume.

If no host and host volume combination is able to satisfy the label match and unallocated space requirements, then the service instance is not scheduled. If multiple hosts satisfy the requirements, then a host may be chosen by considering other scheduling factors, such as other resource requirements or affinity preferences.

Host volumes are configured in priority order within a single host. When multiple host volumes within a single host match the host volume label match expression, then host volumes with higher priority are preferred, as long as they satisfy the unallocated space requirement.
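The selection of a host volume within a single host can be sketched as follows. This is a simplified model, not the actual scheduler: `HostVolume`, `labels_match` and `pick_host_volume` are hypothetical names, and the label match expression is reduced to exact key/value equality because the real expression syntax is not covered in this document.

```python
from dataclasses import dataclass, field


@dataclass
class HostVolume:
    name: str
    unallocated_bytes: int
    labels: dict = field(default_factory=dict)


def labels_match(required: dict, labels: dict) -> bool:
    """Simplified stand-in for a label match expression: all pairs must be equal."""
    return all(labels.get(key) == value for key, value in required.items())


def pick_host_volume(host_volumes, size_bytes, required_labels=None):
    """Pick the first suitable host volume, or None if there is none.

    host_volumes must be given in priority order, highest priority first.
    With no required labels, every host volume matches.
    """
    required = required_labels or {}
    for volume in host_volumes:
        if labels_match(required, volume.labels) and volume.unallocated_bytes >= size_bytes:
            return volume
    return None


# Example host with three host volumes in priority order.
volumes = [
    HostVolume("disk-a", 10, {"speed": "slow"}),
    HostVolume("disk-b", 100, {"speed": "fast"}),
    HostVolume("disk-c", 100, {"speed": "fast"}),
]
```

With these example volumes, a request for 5 bytes without labels lands on `disk-a`, a request for 50 bytes skips `disk-a` for lack of space and lands on `disk-b`, and a request that no host volume can satisfy returns `None`, which corresponds to the service instance not being scheduled on this host.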

Once a service instance with ephemeral volumes is scheduled, whenever the service instance needs to be rescheduled, the scheduler always tries to place it on the host that already holds the ephemeral volumes belonging to this service instance. This is done to preserve the data in the ephemeral volumes. However, this may not always be possible, as described in the section Persistence caveats.

Scheduling of persistent volumes

When a persistent volume does not yet exist on the site the application is about to be deployed to, it is scheduled in the same fashion as an ephemeral volume, as described in the scheduling of ephemeral volumes section. However, because the persistent volume is not automatically deleted when the service is unscheduled from the site, the persistent volume may already exist on the site when a service defining it is scheduled. In such a case, it must be possible to schedule the service on the same host where the persistent volume resides: the label expression must accept this host, the volume label expression must accept the host volume on which the service volume resides, and there must be enough resources to accommodate the service. Otherwise, an error is raised and the service is not scheduled.

In order to reschedule the persistent volume it must first be deleted. In order to delete the persistent volume, it must not be in use, i.e. the service defining this persistent volume must not be scheduled on the site.
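The placement rule for a service with a persistent volume can be sketched as below. The function and exception names are hypothetical, and `eligible_hosts` is assumed to already contain only the hosts that pass the label match, host volume and resource checks, in order of preference.

```python
class SchedulingError(Exception):
    """Raised when the service cannot be scheduled on the site."""


def place_service_with_persistent_volume(existing_volume_host, eligible_hosts):
    """Return the host to schedule the service on.

    existing_volume_host is the host already holding the persistent volume,
    or None if the volume does not exist on the site yet.
    """
    if existing_volume_host is None:
        # No volume yet: schedule as for an ephemeral volume.
        if not eligible_hosts:
            raise SchedulingError("no host satisfies the requirements")
        return eligible_hosts[0]
    if existing_volume_host in eligible_hosts:
        # The volume exists: the service must follow it.
        return existing_volume_host
    raise SchedulingError(
        f"persistent volume resides on {existing_volume_host}, "
        "which does not satisfy the service requirements"
    )
```

The key design point is that an existing persistent volume pins the service to its host. The sketch never falls back to another eligible host, because that would require deleting the volume first.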

Persistence caveats

The data in an ephemeral volume instance is kept as long as its parent service instance is scheduled on the same host. The scheduler will always try to place a service instance with an existing ephemeral volume on the host where this volume is stored, but only as long as the host satisfies all of the other hard scheduling requirements. For example, if a label match expression is changed in such a way that the service instance can no longer be placed on a host where its ephemeral volume is stored, then the service will be moved and the data in the ephemeral volume deleted. Another scenario when an ephemeral volume needs to be deleted is when the host volume label match expression for the volume is changed or the size requirements for the ephemeral volume are increased in a way that it no longer fits the remaining unallocated space on the currently assigned host volume. This consideration does not, however, apply to persistent volumes as they are never rescheduled or deleted without manual intervention.
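The conditions under which an ephemeral volume keeps its data after a specification change can be condensed into a single predicate. This is a sketch under assumptions: the function and parameter names are hypothetical, and the size check assumes that only the increase over the current size has to fit into the remaining unallocated space of the host volume.

```python
def ephemeral_data_kept(
    host_still_eligible: bool,
    host_volume_still_matches: bool,
    current_size_bytes: int,
    new_size_bytes: int,
    unallocated_bytes: int,
) -> bool:
    """Return True if the ephemeral volume can stay where it is.

    host_still_eligible: the current host meets all hard scheduling requirements.
    host_volume_still_matches: the current host volume still satisfies the
        host volume label match expression.
    """
    growth = max(0, new_size_bytes - current_size_bytes)
    return (
        host_still_eligible
        and host_volume_still_matches
        and growth <= unallocated_bytes
    )
```

If the predicate is false, the volume is recreated elsewhere and its data is lost. Persistent volumes are outside the scope of this check, since they are never moved automatically.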

Another scenario to consider is when a host becomes inaccessible, for example because it loses network connectivity to other hosts within the same site. The scheduler will then eventually decide to reschedule the service instance on another host. In the ephemeral volume case, this will lead to two copies of the volume instance existing on the site simultaneously, one of which will eventually be deleted. In the persistent volume case, this means that the service instance cannot be rescheduled in this scenario.

It should also be noted that because an ephemeral or persistent volume instance exists in a single copy on a single host, it is prone to data loss in hardware failure scenarios.